What it is

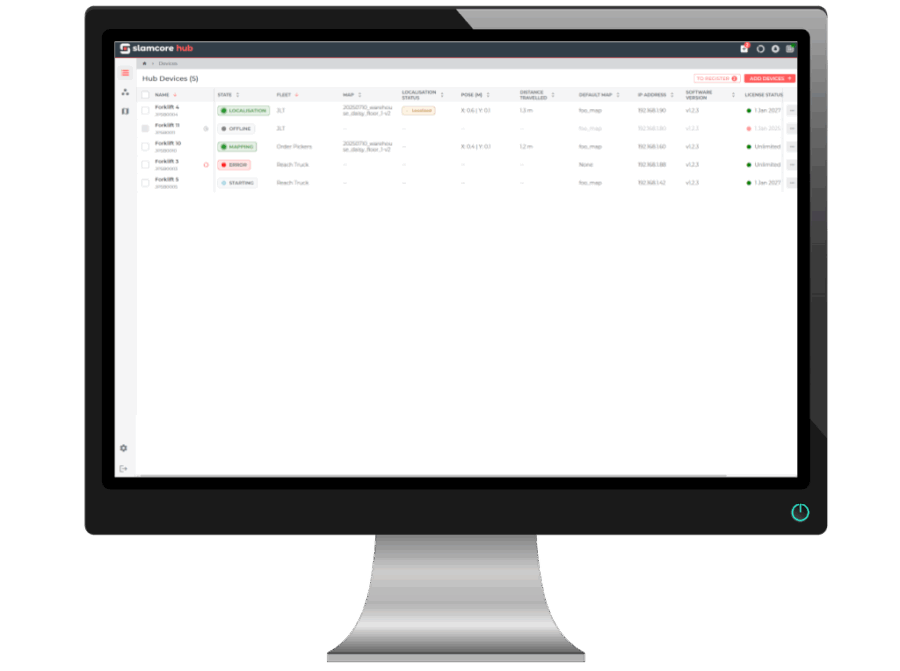

A retrofit compute and camera module that streams live location and object data (position and orientation), delivering a real-time location system (RTLS) without beacons or extra infrastructure.

Fits on

Forklifts, reach trucks, and tuggers.

Who it’s for

• System Integrators

• Value-Added Resellers

• Operations & Facilities Managers

• Safety & Productivity Teams

• Business Intelligence & Analytics Teams

• Forklift OEMs

Availability

Shipping now.