Unleash the power of spatial intelligence

Bring ideas to life faster with visual SLAM for positioning, perception and mapping

The power of Slamcore

Humans orient themselves using their senses and context, with the brain doing the processing. Robots need an equivalent: novel algorithms designed and optimized for embedded performance.

SLAM helps robots and other devices understand their surroundings by calculating their position and orientation relative to the world around them – while simultaneously creating a map of the surroundings.

Our visual SLAM uses data from a stereo camera and can fuse it with additional data from other sensors to create reliable, accurate, and robust spatial intelligence that devices can use to understand their surroundings, location, and next move.

How Slamcore makes it happen

Position

Where am I?

For robots to make better decisions as they move through space, they need to know where they are.

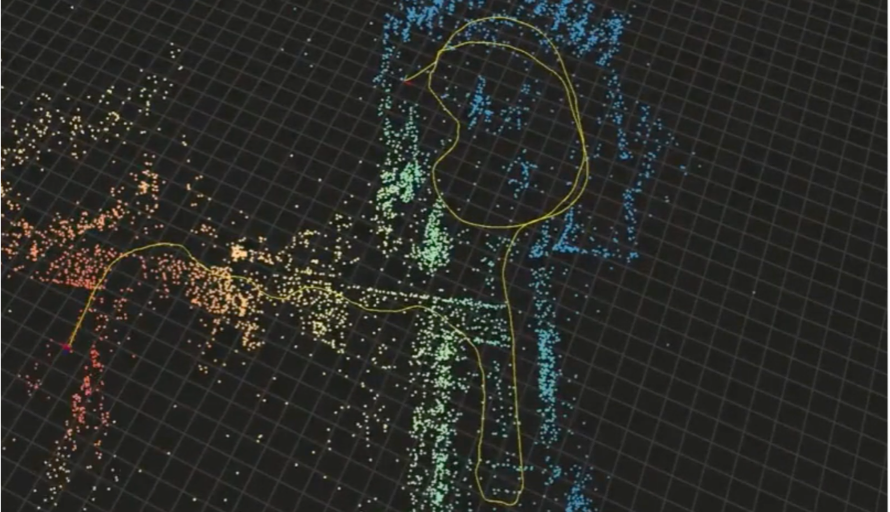

Our visual-inertial SLAM software processes images from a stereo camera, detecting notable features in the environment which are used to understand where the camera is in space.

Those features are saved to a sparse 3D map, which can be used to relocalize over multiple sessions or shared with other vision-based products in the same space.

Drift in the position estimate is constantly accounted for by comparing all previous measurements to the live view and triggering corrections as locations are revisited, a process known as loop closure.
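To make the idea of loop closure concrete, here is a minimal sketch in Python. It is not Slamcore's implementation: production SLAM systems typically correct drift with pose-graph optimization, whereas this example simply spreads the accumulated error evenly along the loop. The function names and the 2D point representation are assumptions made for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def integrate_odometry(start: Point, steps: List[Point]) -> List[Point]:
    """Dead-reckon a 2D trajectory by summing relative motion steps."""
    poses = [start]
    for dx, dy in steps:
        x, y = poses[-1]
        poses.append((x + dx, y + dy))
    return poses


def close_loop(poses: List[Point], revisited_index: int) -> List[Point]:
    """Spread the loop-closure error linearly over the poses in the loop.

    Assumption: the last pose observes exactly the same place as
    poses[revisited_index], so any difference between them is drift.
    """
    last = len(poses) - 1
    span = last - revisited_index
    if span <= 0:
        return list(poses)
    err_x = poses[last][0] - poses[revisited_index][0]
    err_y = poses[last][1] - poses[revisited_index][1]
    corrected = list(poses[: revisited_index + 1])
    for i in range(revisited_index + 1, last + 1):
        weight = (i - revisited_index) / span  # grows from 0 to 1 along the loop
        corrected.append((poses[i][0] - weight * err_x,
                          poses[i][1] - weight * err_y))
    return corrected


# A square loop whose final step has drifted slightly.
steps = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.1, -0.9)]
drifted = integrate_odometry((0.0, 0.0), steps)
corrected = close_loop(drifted, revisited_index=0)
```

After correction, the final pose coincides with the starting pose again, and the intermediate poses are each shifted by a share of the error.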

Robust feature detectors, together with the AI models in our SLAM pipeline, keep positioning accurate even in challenging, dynamic environments.

Perceive

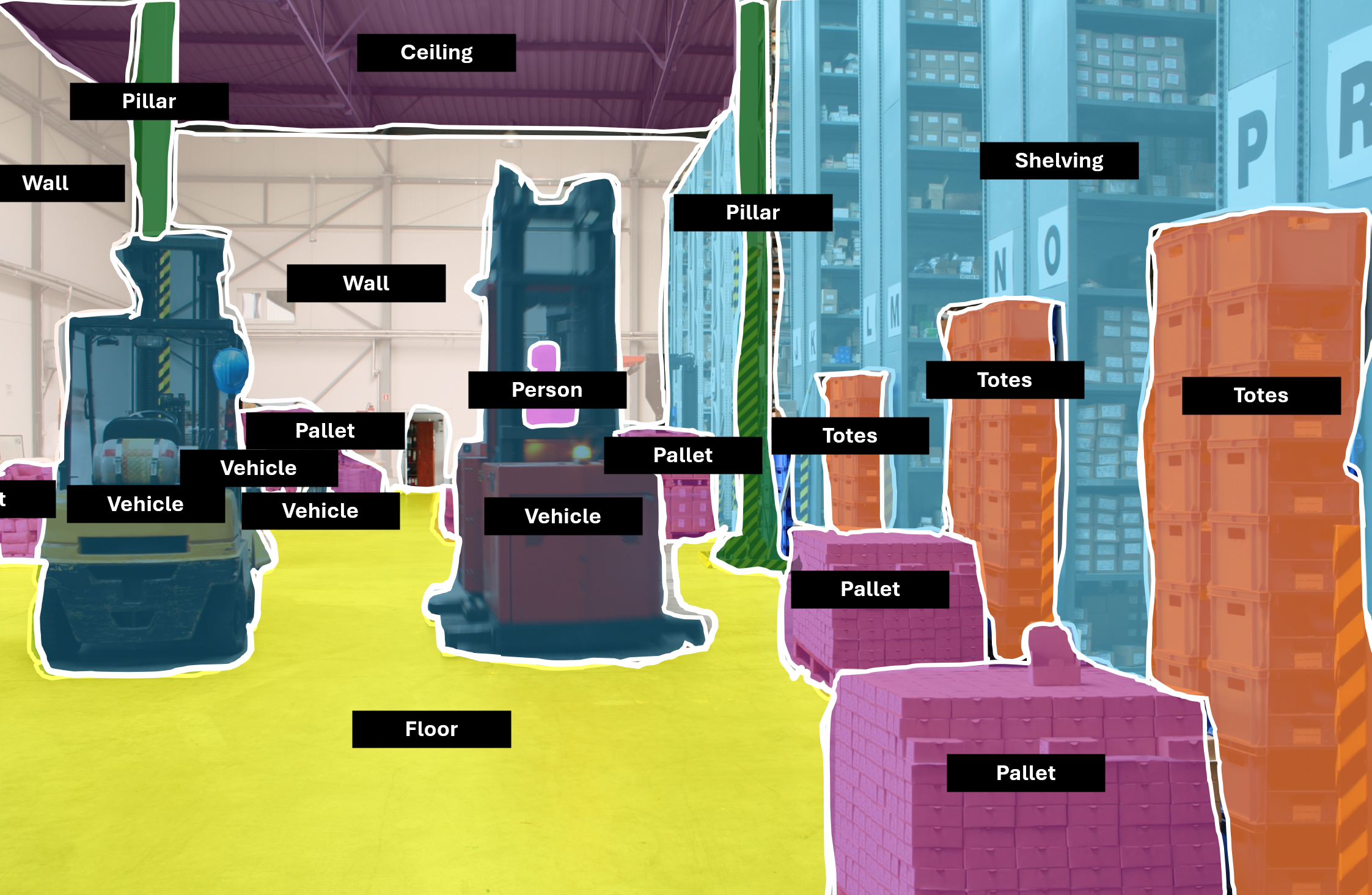

What are the objects around me?

Object detection and semantic segmentation are integrated directly into our positioning pipeline and give your robot a sense of what it’s actually seeing.

This information can be used to improve position estimates by ignoring measurements against dynamic objects or to enhance obstacle detection by enabling different navigation behaviours depending on the detected obstacle class.
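As a rough illustration of both uses, the Python sketch below filters out features that land on dynamic objects and picks a navigation behaviour per obstacle class. The class labels, behaviour names, and the per-pixel label mask are all hypothetical and are not taken from Slamcore's API.

```python
from typing import List, Tuple

# Hypothetical class labels treated as moving objects.
DYNAMIC_CLASSES = {"person", "forklift"}

# Hypothetical mapping from obstacle class to navigation behaviour.
BEHAVIOURS = {"person": "stop_and_wait", "pallet": "replan_around"}


def static_features(features: List[Tuple[int, int]],
                    label_mask: List[List[str]]) -> List[Tuple[int, int]]:
    """Keep only features whose pixel is not labelled as a dynamic class.

    features are (u, v) pixel coordinates; label_mask[v][u] is the
    semantic label of that pixel.
    """
    return [(u, v) for (u, v) in features
            if label_mask[v][u] not in DYNAMIC_CLASSES]


def navigation_behaviour(obstacle_class: str) -> str:
    """Choose a behaviour for an obstacle, with a cautious default."""
    return BEHAVIOURS.get(obstacle_class, "slow_down")


# A tiny 2 x 3 segmentation mask and three detected features.
mask = [
    ["wall", "person", "wall"],
    ["floor", "person", "pallet"],
]
features = [(0, 0), (1, 0), (2, 1)]
kept = static_features(features, mask)
```

Here the feature at pixel (1, 0) falls on a person and is dropped, so only measurements against static structure would feed the position estimate.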

Map

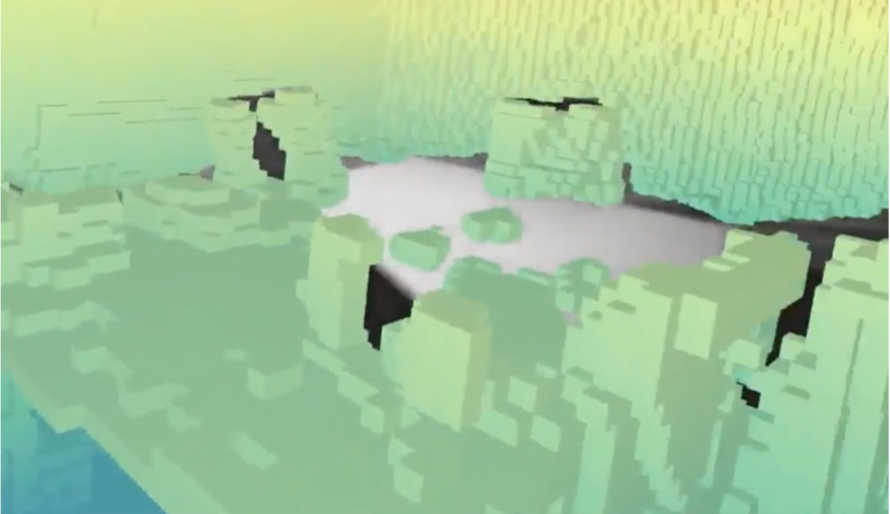

What’s around me?

Depth data from a stereo camera, time-of-flight sensor or LiDAR helps us understand how far away physical objects are.

With this information, your robot can build an occupancy height map, giving it a full 2D or 2.5D representation of its environment, which can be used for autonomous navigation.
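The sketch below shows one simple way such a map could be built from 3D points, assuming the points are already expressed in a world frame with z pointing up. The grid cell size, the height threshold, and the function names are illustrative assumptions, not Slamcore parameters.

```python
import math
from typing import Dict, Iterable, Tuple

Cell = Tuple[int, int]


def build_height_map(points: Iterable[Tuple[float, float, float]],
                     cell_size: float = 0.5) -> Dict[Cell, float]:
    """Build a 2.5D map: the tallest observed z value per grid cell."""
    height_map: Dict[Cell, float] = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        height_map[cell] = max(z, height_map.get(cell, float("-inf")))
    return height_map


def to_occupancy(height_map: Dict[Cell, float],
                 max_traversable_height: float = 0.1) -> Dict[Cell, bool]:
    """Flatten the height map to 2D: True marks a cell as an obstacle."""
    return {cell: height > max_traversable_height
            for cell, height in height_map.items()}


# Three depth points in metres: two in one cell, one further along x.
points = [(0.1, 0.1, 0.0), (0.2, 0.3, 0.4), (1.2, 0.1, 0.05)]
heights = build_height_map(points)
occupancy = to_occupancy(heights)
```

The height map keeps one value per cell (the 2.5D representation), and thresholding it yields the plain 2D occupancy grid a planner could use.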

When all three levels of SLAM – localization, semantic understanding and mapping – combine, we get full-stack spatial intelligence, providing machines with complete spatial understanding.