This is the first installment in a three-part series that explores the levels of SLAM compatibility that form part of our full-stack SLAM algorithm.

Simultaneous Localization and Mapping (SLAM) is the crucial science that enables robots, autonomous machines, and VR/AR wearables to understand where they are in a physical space. As specialists in this area, we aim to offer designers and developers a shortcut to implementing highly accurate, robust, and cost-effective solutions to overcome this challenging problem at a commercial scale.

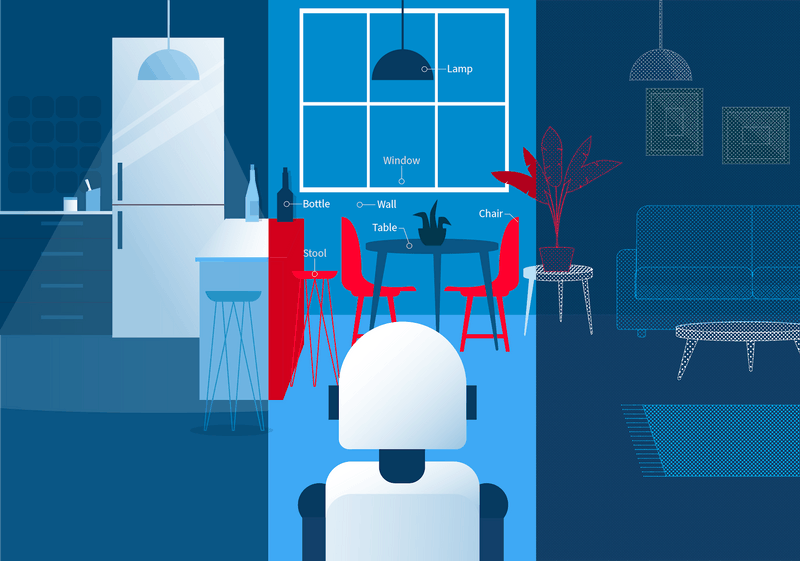

Imagine waking up in a strange room that you’ve never visited before. Your immediate response is to ‘get your bearings.’ What can you see around you? Where are the walls and the floor? What other furniture, people, and objects occupy the space? Using our eyes and the balance and motion-sensing elements of our ears, we quickly understand the shape of the area around us and our position relative to the things in it.

Slamcore’s Visual Inertial SLAM algorithms allow robots and other autonomous devices to do the same. Using two low-cost cameras (similar to those found on even the most basic of smartphones) plus an inertial measurement unit (which senses movement and orientation), we give robots the ability to ‘get their bearings’ in new and unknown physical spaces.

Many other SLAM solutions exist, but they tend to run into the same challenges. Generic solutions often don’t provide the accuracy and robustness needed for commercial applications. Bespoke solutions are optimized for dedicated hardware but don’t work in other configurations, which means that it’s back to the drawing board for each and every new application.

Slamcore has developed an accurate positioning capability that integrates easily with a wide range of hardware and meets the demands of designers of autonomous machines. With our algorithms, anyone looking to add effective positioning capabilities to their devices can do so easily.

Together, the two stereoscopic cameras provide vast amounts of information about the world around the robot. The trick is detecting and using the right ‘features’ to estimate position quickly and accurately. A feature can be any well-defined point in a scene, be it a corner, an edge, or even a light fixture. Selecting a few hundred of them enables the creation of a sparse map of points. Our algorithms then calculate the distance to each point with millimeter accuracy.
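To make the idea of measuring distance with two cameras concrete, here is a minimal sketch of textbook stereo triangulation. It is not Slamcore’s implementation. It assumes an idealized, rectified pinhole camera pair, and the function names, focal length, baseline, and pixel coordinates are all hypothetical values chosen for illustration. The same feature appears at slightly different horizontal positions in the left and right images, and that offset (the disparity) gives the depth.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters of a feature seen by a rectified stereo pair: Z = f * B / d.

    focal_px     -- focal length in pixels (assumed identical for both cameras)
    baseline_m   -- distance between the two camera centers, in meters
    disparity_px -- horizontal pixel offset of the feature between left and right images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def triangulate(u_left, v, u_right, focal_px, baseline_m, cx, cy):
    """Back-project one matched feature to a 3D point (x, y, z) in the left camera frame.

    (u_left, v) and (u_right, v) are the feature's pixel coordinates in each image;
    (cx, cy) is the principal point (image center) of the left camera.
    """
    z = depth_from_disparity(focal_px, baseline_m, u_left - u_right)
    x = (u_left - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return (x, y, z)


# Hypothetical example: 500 px focal length, 10 cm baseline, 640x480 image.
point = triangulate(u_left=345.0, v=240.0, u_right=320.0,
                    focal_px=500.0, baseline_m=0.1, cx=320.0, cy=240.0)
print(point)
```

Repeating this for a few hundred matched features yields a sparse cloud of 3D points. A full SLAM system would additionally fuse inertial data and refine both the points and the camera pose over time, which this sketch does not attempt.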

Crucially, these calculations happen in real time on low-cost, low-power processors onboard the autonomous device, which makes the approach well suited to real-world applications where cost, weight, and power consumption are critical constraints.

As wearable technology adopts mixed reality, the requirement for enhanced spatial intelligence becomes even more crucial. End-users of wearable tech need accurate positioning both to navigate their environment safely and to have virtual objects placed seamlessly in mixed realities.

Slamcore’s algorithms provide this pose estimation using ultra-lightweight and low-power hardware that can easily be incorporated into headsets and other wearable devices suited for long-term use.

Our positioning algorithms are lightweight enough to run on a single Raspberry Pi processor, allowing the creation of sparse maps of spaces at scale as the foundation of Slamcore’s spatial intelligence pyramid. Whether the subject is a robot in a warehouse, a drone inspecting a pipeline, or a person wearing a VR headset, calculating its precise position accurately is essential.

Once position is determined, the ‘Position’ layer makes way for the ‘Map’ and ‘Perceive’ layers of the Slamcore spatial intelligence pyramid. Collectively, these layers provide the advanced capabilities required by an emerging generation of autonomous machines and devices that can transform many facets of our lives and work.

Our goal at Slamcore is to accelerate the deployment of these solutions in real-world situations by democratizing access to the best spatial intelligence possible.