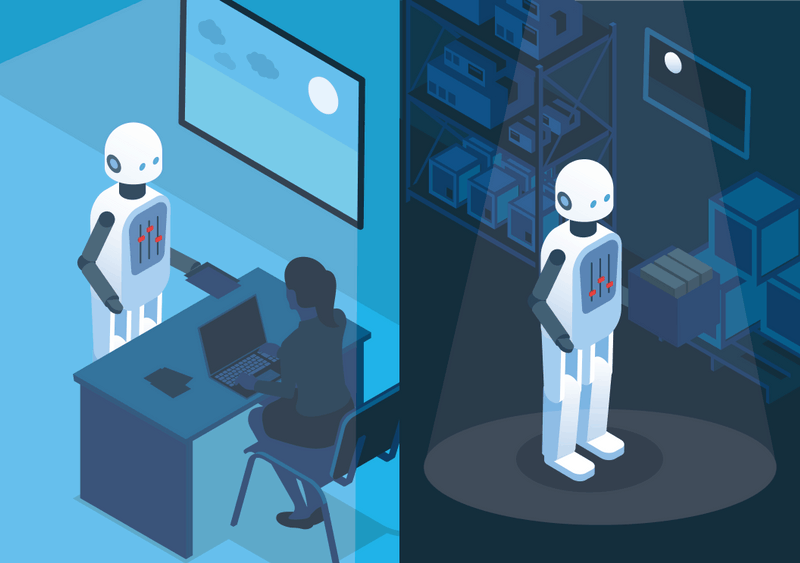

Changes in lighting, large spaces, or uniform environments with few distinguishing features can all present significant challenges to robots trying to locate themselves and navigate in real-world situations. Developers are well aware of the ‘Corridor Problem’, in which robots find it hard to locate themselves in environments with lots of identical doorways and aisles, and the ‘Warehouse Problem’, where so few measurement points are within range of sensors that it is hard to calculate an accurate position. Vision-based systems generally work better in these scenarios because they ‘see’ far more, and so can capture more features in a scene from which to calculate a position. However, selecting the right parameters is critical to delivering SLAM (simultaneous localization and mapping) algorithms that create robust and accurate location estimates whilst still making efficient use of compute resources.

The problem is that, with hundreds of settings and thousands of combinations, developers can easily spend months tuning mapping and location systems to perform effectively in any specific scenario. The ‘right’ combination of parameters will vary between situations and use cases. Robots destined to operate in large warehouses will need different SLAM settings from those working in tighter offices or hospital wards, which have more features but where objects such as beds and chairs can be moved. Robots working outdoors will face a different set of challenges from those designed for indoor use, such as more variable lighting and more distant landmarks.

Developers have a wide range of options as they select and test the best combination of sensor and algorithm parameters for their robots. Until now, the only approach was trial and error: painstakingly setting each combination and testing its accuracy, normally in lab conditions. Not only is this time-consuming and costly, but it also provides little evidence of success once the robot is trialled in real-world situations.

SLAMcore’s upcoming spatial intelligence software release includes features that will radically improve this process. Using our expertise and experience in autonomous mapping and localization for robots in real-world situations, we have established a set of customized ‘pre-set’ parameter combinations. Previously, we worked with customers to help set the right combination, and users were only able to change a few parameters themselves. Now, with the upcoming SLAMcore release, we are presenting a range of parameter presets out of the box, with support for a high degree of straightforward user customization.

Developers can now use simple flags and variable settings to toggle between inside and outside setups, between ‘real-time’ location and ‘high accuracy’, or between different environments such as warehouse or office. Each setup gives different weight to factors such as feature count, dialling up or down the amount of data the software seeks to process in any given scene. For example, when working inside it may be enough to identify 100 features in a scene, since there are lots of edges, corners, doorways etc. that can be ‘seen’ and used for navigation. Outside, this setting may need to be increased: where there is more space and fewer identifiable landmarks in the immediate vicinity, more distant features need to be considered to provide enough options for location estimation.
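As a rough illustration of how presets of this kind might be organised, here is a minimal Python sketch. The `SlamPreset` class, the `select_preset` function and the parameter names are hypothetical and are not part of the SLAMcore software. The indoor figure of 100 features comes from the example above; the outdoor value is simply an assumed larger number.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SlamPreset:
    """Hypothetical bundle of SLAM parameters (not SLAMcore's real schema)."""
    name: str
    feature_count: int  # target number of visual features per scene
    realtime: bool      # True favours low latency, False favours accuracy


# Illustrative values only: 100 indoors follows the article's example,
# 300 outdoors is an assumption chosen to be larger.
PRESETS = {
    "indoor": SlamPreset("indoor", feature_count=100, realtime=True),
    "outdoor": SlamPreset("outdoor", feature_count=300, realtime=True),
}


def select_preset(environment: str, high_accuracy: bool = False, **overrides) -> SlamPreset:
    """Pick a preset by environment, then apply a mode flag and user overrides."""
    if environment not in PRESETS:
        raise ValueError(f"unknown environment: {environment!r}")
    preset = PRESETS[environment]
    if high_accuracy:
        # Trade latency for accuracy.
        preset = replace(preset, realtime=False)
    # Individual fields can still be customised on top of the preset.
    return replace(preset, **overrides)


indoor = select_preset("indoor")
outdoor_accurate = select_preset("outdoor", high_accuracy=True, feature_count=400)
```

The idea is that a preset supplies sensible defaults for an environment, while flags and overrides let a developer adjust individual values without retuning everything from scratch.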

Many feel that visual-inertial systems are less effective in low light or where lighting is variable. However, with the right combination of parameters, Visual-Inertial SLAM systems are robust and accurate in a wide range of environments. By tuning the parameters for applications as well as environments, developers can start to deliver highly robust solutions. For example, settings can be chosen for high definition in mapping scenarios – helping robots to capture as much information as possible to create detailed reference point-clouds. Parameters can then be reset for computationally efficient, live navigation within those maps as the robot is deployed to go about its functions.
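The two-phase idea, detailed settings while building the map and lighter settings while navigating within it, could be sketched as follows. This is an assumption-laden illustration: the `Phase` enum, the parameter names and all values are hypothetical and do not reflect the actual SLAMcore configuration.

```python
from enum import Enum


class Phase(Enum):
    MAPPING = "mapping"        # build a detailed reference map
    NAVIGATION = "navigation"  # localise efficiently inside that map


# Hypothetical parameter names and values, for illustration only.
PHASE_PROFILES = {
    Phase.MAPPING: {"feature_count": 400, "save_point_cloud": True},
    Phase.NAVIGATION: {"feature_count": 100, "save_point_cloud": False},
}


def parameters_for(phase: Phase) -> dict:
    """Return a copy so callers can tweak values without changing the profile."""
    return dict(PHASE_PROFILES[phase])


mapping_params = parameters_for(Phase.MAPPING)
navigation_params = parameters_for(Phase.NAVIGATION)
```

Keeping the two profiles separate makes the trade-off explicit: the mapping run spends more compute to capture detail once, and the navigation run reuses that map with a cheaper configuration.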

This combination has been proven to be highly robust even when light conditions change dramatically between map-making runs and navigation. This approach also creates robust position estimates that are highly resilient to dynamic objects.

By providing these easy-to-use presets and variable parameters, we believe we can not only dramatically reduce the trial and error necessary, and thus the time and resources needed, but also increase the robustness, accuracy and efficiency of SLAM. We expect that a significant majority of use cases and environments will be successfully managed using our presets, allowing customers to spend more time on edge cases and on the wider challenges of their robot designs. We will, of course, continue to work closely with customers to get the best out of our software and deliver SLAM that meets the demands of real-world use cases in a cost-effective manner.

Our focus is always on delivering the best possible SLAM solutions to the widest possible section of the market. Parameter setting is complex, time-consuming and hard to get right. By creating these customized parameter presets we can more effectively share our own expertise and experience to save months of developers’ time and open up opportunities for many more robot designers to quickly deploy effective prototypes and production robots that deliver value in real-world situations.

The latest version of our software will be released in the coming weeks.