Adding visual spatial intelligence to existing real-time location systems in logistics and industrial settings could transform safety and efficiency. Visual data provides a common language that both machines and human operators can understand. By adding cameras, or by using cameras already fitted to autonomous or manually driven vehicles and robots, site operators can benefit from a comprehensive, detailed and accurate real-time map of all vehicle activity within the facility. Thanks to the latest NVIDIA Jetson system-on-modules for edge AI and robotics, visual data can be processed ‘at the edge’ – on the autonomous machine or robot itself – to accurately identify individual objects as they move around a warehouse.

Safe, fast and efficient

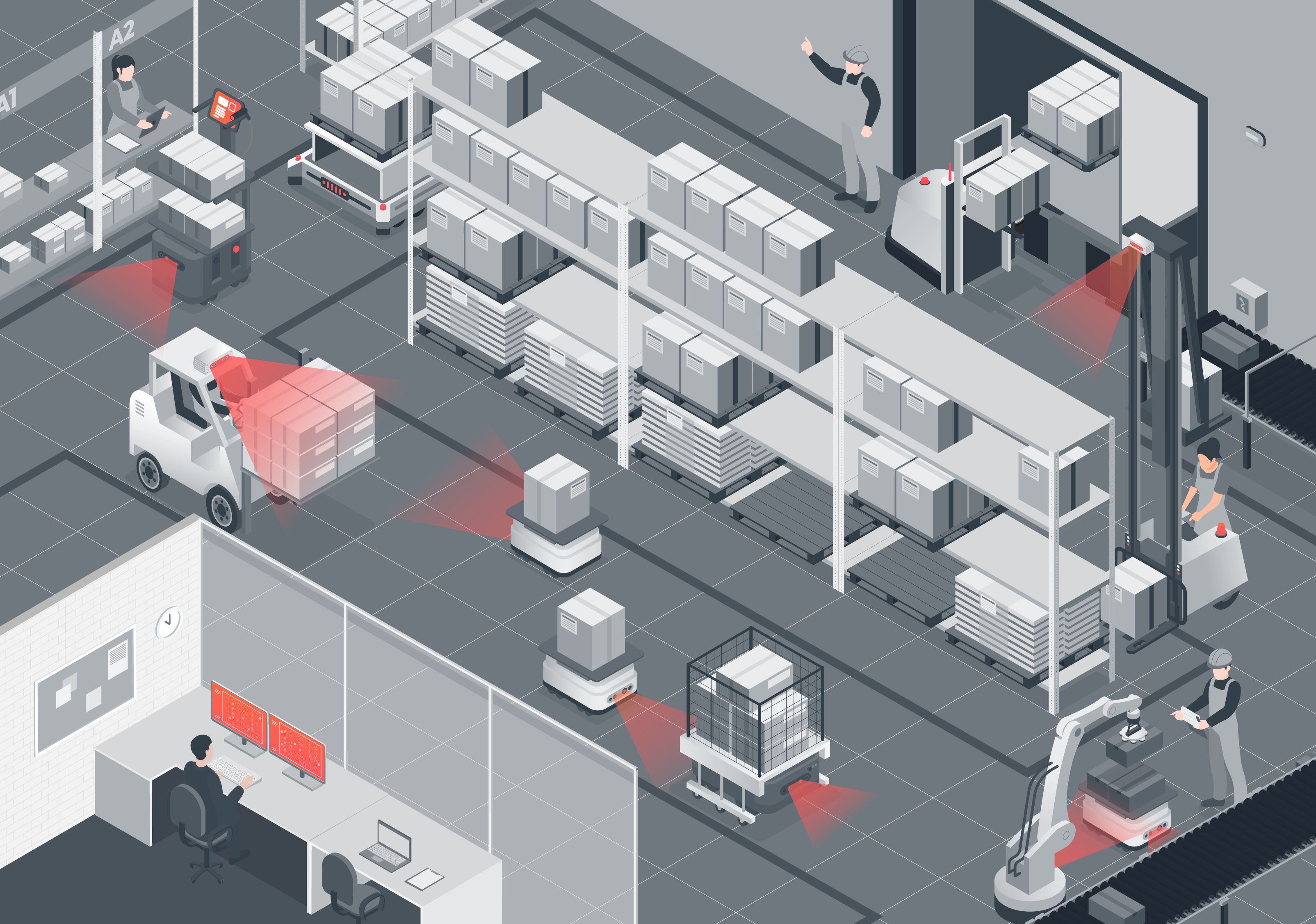

Efficient intralogistics and factory operations are a balance of speed and safety. The faster goods move through a facility, whether that’s transforming raw materials into finished products or distributing those products along the supply chain, the more efficient (and profitable) the facility can be. Complex logistics management systems are used to calculate the best routes through and around factories and warehouses. But these environments are constantly changing, and it is often hard to keep track of exactly where everything is: goods, vehicles and people are all in constant motion. Vision provides an additional sensor modality which can help manage these fluid environments. Imagine if every manual vehicle, automated guided vehicle (AGV) and autonomous mobile robot (AMR) could ‘see’ and accurately report not only its own position but also the location and category of everything it saw!

AI at the edge with NVIDIA Jetson

Slamcore’s visual spatial intelligence algorithms make use of the power of NVIDIA Jetson modules to bring exactly that capability to a wide range of factory and warehouse intralogistics vehicles. Slamcore’s AI runs on any Linux operating system across the full Jetson range of products, including the Nano and the Orin. It delivers positional accuracy of the order of centimetres and, just as importantly, reliability and pose availability close to 100%. With hundreds of Slamcore deployments powered by NVIDIA Jetson in 2023, tens of thousands of real-world kilometres have been clocked to date. Thousands of deployments and hundreds of thousands of real-world kilometres are forecast for 2024, providing further validation that Slamcore software, powered by NVIDIA Jetson hardware, provides scalable value to this growing industry. Slamcore recently graduated from the NVIDIA Inception program for cutting-edge startups and is now part of the NVIDIA Partner Network.

With Slamcore’s neural network AI models processing visual data, any mobile robot can see and distinguish between objects such as people, pallets and other vehicles in real time. Robots can not only be programmed to react differently to different objects (for example, slowing down when approaching a person but maintaining speed when passing a pallet), but can also share this information. If a manually driven forklift ‘sees’ a new consignment of goods placed at the end of an aisle, that consignment can be added to a shared spatial map of the warehouse. Every other vehicle, both manual and autonomous, now knows the location of that new consignment.
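To make the idea concrete, here is a minimal Python sketch of class-dependent behaviour combined with a shared map. It is not Slamcore’s API: the names `Detection`, `SharedMap` and `target_speed`, the speed values and the slowdown radius are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical speed limits (m/s) per detected object class; values are illustrative only.
SPEED_LIMITS = {"person": 0.3, "vehicle": 0.8, "pallet": 1.5}
CRUISE_SPEED = 1.5     # assumed normal travel speed, m/s
UNKNOWN_SPEED = 0.3    # assumed cautious speed near an unrecognised object, m/s
SLOWDOWN_RADIUS = 5.0  # assumed distance (m) within which a detection affects speed


@dataclass(frozen=True)
class Detection:
    label: str  # semantic class, e.g. "person", "pallet", "vehicle"
    x: float    # position in the shared map frame, metres
    y: float


@dataclass
class SharedMap:
    """Toy stand-in for a fleet-wide spatial map keyed by object id."""
    objects: dict = field(default_factory=dict)

    def report(self, object_id, detection):
        # Latest report wins; a real system would fuse observations and timestamps.
        self.objects[object_id] = detection


def target_speed(vehicle_xy, detections):
    """Return the lowest speed limit imposed by any detection within range."""
    vx, vy = vehicle_xy
    speed = CRUISE_SPEED
    for d in detections:
        distance = ((d.x - vx) ** 2 + (d.y - vy) ** 2) ** 0.5
        if distance <= SLOWDOWN_RADIUS:
            speed = min(speed, SPEED_LIMITS.get(d.label, UNKNOWN_SPEED))
    return speed


# A forklift reports a new consignment; every vehicle reading the map now knows about it.
shared = SharedMap()
shared.report("consignment-17", Detection("pallet", 12.0, 3.0))
speed_near_pallet = target_speed((10.0, 3.0), shared.objects.values())

# A person is then reported nearby, so the same vehicle should slow down.
shared.report("person-4", Detection("person", 11.0, 3.0))
speed_near_person = target_speed((10.0, 3.0), shared.objects.values())
```

In this sketch the vehicle keeps its cruise speed while only the pallet is in range and drops to the person limit once a person is reported within the radius. Treating unrecognised classes cautiously is a design assumption here, not something stated in the article.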

Using Slamcore’s ‘Perceive’ semantic segmentation capabilities, an accurate categorization of each object can be automatically shared and understood. Perceive also has significant safety and efficiency benefits. Accurately identifying objects can help avoid accidents, because shared visual data removes blind spots: even if you can’t see another vehicle, someone else can see it, identify it and report it to the fleet management system. With shared spatial awareness, it is also easier to spot and plot the most efficient routes, knowing which assets are closest to the jobs that need doing.
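As a rough illustration of how knowing asset positions can inform job allocation, the following Python sketch greedily assigns each job to the nearest free vehicle. The function `assign_nearest`, the vehicle and job names, and the use of straight-line distance are assumptions made for this example; a real fleet manager would more likely use path distances through the facility and a proper optimisation method.

```python
import math


def assign_nearest(vehicles, jobs):
    """Greedily assign each job to the closest unassigned vehicle.

    vehicles: dict of vehicle id -> (x, y) position in the shared map frame
    jobs:     dict of job id -> (x, y) job location
    Returns a dict of job id -> vehicle id.
    """
    free = dict(vehicles)
    assignment = {}
    for job_id, job_xy in jobs.items():
        if not free:
            break  # more jobs than vehicles; remaining jobs stay unassigned
        # Straight-line distance is a simplification of real route cost.
        nearest = min(free, key=lambda v: math.dist(free[v], job_xy))
        assignment[job_id] = nearest
        del free[nearest]
    return assignment


# Hypothetical positions reported to the shared map, in metres.
vehicles = {"forklift-1": (0.0, 0.0), "amr-2": (10.0, 0.0)}
jobs = {"pick-A": (9.0, 1.0), "pick-B": (1.0, 1.0)}
plan = assign_nearest(vehicles, jobs)
```

Here `plan` pairs each job with the vehicle that is currently closest to it. Greedy assignment is simple but not globally optimal, which is acceptable for a sketch.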

Retrofit to add vision

The combination of Slamcore’s algorithms and the high performance, low power and small footprint of NVIDIA Jetson modules means that low-cost cameras can be added to most robots, AGVs and AMRs in warehouse and factory environments. Whether ‘designed in’ to new autonomous machines, or retro-fitted as specialized visual-spatial intelligence units added to existing fleets, the Slamcore solution turbocharges real-time location systems to become much richer and more capable.

Today’s warehouses already have a ‘mixed economy’ of autonomous robots and manually driven vehicles. They also have one or more overlapping infrastructure-level solutions and, most likely, some sort of real-time location system designed to make sense of the environment. All of these systems play a role in the efficient operation of the facility. Adding visual spatial intelligence need not disrupt any of this. If additional cameras are needed, they are low cost and simple to add. The power and price of the NVIDIA Jetson lineup of modules mean that, for a marginal additional investment, logistics and factory operators can quickly transform the effectiveness of not only their intralogistics fleets but their entire operations.

To find out more, get in touch or visit us at Manifest or Modex later this year.