More and more robots operate alongside people in environments around the world. Some are beginning to incorporate sophisticated Simultaneous Localization and Mapping (SLAM) functions to underpin true autonomy. But getting SLAM right, as with many elements of robotics, remains extremely difficult. Getting it to work reliably in the real world, with its ever-changing environments and conditions, is even harder. It can take millions of dollars and months of painstaking trial-and-error experiments to prepare an autonomous robot to localize and navigate in even a relatively uniform environment. Getting more robots out of the lab and deployed on real tasks in environments built for humans requires accurate, computationally efficient and lower-cost solutions.

Open-source software is the foundation of robotics. It allows teams to experiment, design and prototype robots in the lab relatively quickly and cost-effectively. As more designers use it and contribute code back, the open-source libraries grow more comprehensive. It is a vital part of the ecosystem. At the other end of the spectrum is bespoke code, created for a specific function within a specific robot completing a specific task. It can be extremely effective for that robot, but it is an expensive solution that is difficult, if not impossible, to reuse with different hardware and sensors in a different robot performing a different role.

To take the next leap forward, the robotics industry needs software that is reliable and effective in the real world, yet flexible and cost-effective to integrate into a wider range of robot platforms, and optimized to make efficient use of limited compute, power and memory resources. Creating ‘commercial-grade’ software that is robust enough to be deployed in thousands of robots in the real world, at prices that make that scale achievable, is the next challenge for the industry.

In hardware, and processors in particular, the industry is already coalescing around a small number of devices created for the edge-processing demands of autonomous systems, including robots. Arm reference designs are quickly becoming the de facto standard, with chip manufacturers such as NVIDIA, Qualcomm, MediaTek and Texas Instruments creating a range of options tuned to the demands of robotics. But even with that performance available, software must be careful and consistent in the demands it makes on processors. Embedded processors must run numerous tasks simultaneously with finite resources. Ensuring the maximum efficiency of each task, whilst minimizing the number of cores and the amount of memory needed, is fundamental to creating robots that are not only highly capable, but affordable.

Don’t Overlook SLAM Efficiency

SLAM is just one element of the overall autonomy stack, but it provides an excellent illustration of why it matters to pay close attention to the demands algorithms place on processors and memory. When designing and testing in the lab, or even within a controlled ‘real-world’ environment, it is easy to overlook how efficiently a SLAM system uses resources. Individual systems are often tested in isolation, so if the SLAM software suddenly starts to consume two or three times as much compute, it can go unnoticed. Only when other systems rely on the same processor at the same time does it present a problem. An easy solution is to add more processor cores, but in commercial deployments this adds significant cost to every robot, severely limiting commercial viability.

Our research and bench testing have shown that many open-source, and even some commercial, SLAM algorithms not only make high demands on both compute and memory resources, but are also highly unpredictable in those demands. Peaks and large swings in processor and memory requirements are hard for developers to design around. Designing for the average demand runs the risk that the peaks will overwhelm the processor, prevent other tasks from being accomplished, or stop SLAM estimates from being completed in a timely fashion. To avoid this, developers are forced to specify processors based on peak demand, with the result that they pay for far more silicon than the robot needs most of the time.
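The gap between sizing for the average and sizing for the peak can be made concrete with a small calculation. The sketch below is illustrative only: the function name `summarize_load`, the two traces and the unit (percent of one core per sample) are assumptions made for this example, not measurements from the tests described in this article.

```python
import math

def summarize_load(samples_pct):
    """Summarize a CPU-load trace.

    samples_pct: load samples in percent of ONE core (250 means 2.5 cores busy).
    Returns the mean, the peak, their ratio, and the whole cores needed
    to cover the mean load and the peak load respectively.
    """
    avg = sum(samples_pct) / len(samples_pct)
    peak = max(samples_pct)
    return {
        "mean_pct": avg,
        "peak_pct": peak,
        "peak_to_mean": peak / avg,
        "cores_for_mean": math.ceil(avg / 100),
        "cores_for_peak": math.ceil(peak / 100),
    }

# Hypothetical traces (assumed values, not data from the article).
steady = [90, 95, 100, 95, 90, 100, 95, 95]   # predictable load
spiky = [80, 90, 320, 70, 90, 280, 80, 90]    # similar baseline, large peaks

for name, trace in (("steady", steady), ("spiky", spiky)):
    s = summarize_load(trace)
    print(
        f"{name}: mean={s['mean_pct']:.1f}% peak={s['peak_pct']}% "
        f"cores(mean)={s['cores_for_mean']} cores(peak)={s['cores_for_peak']}"
    )
```

In this made-up example the steady trace fits on one core whether it is sized for the mean or the peak, while the spiky trace needs two cores for its mean but four to survive its peaks. That difference is the extra silicon a developer ends up buying.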

Accurate, Robust and Commercially Viable

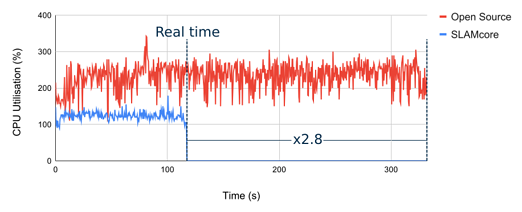

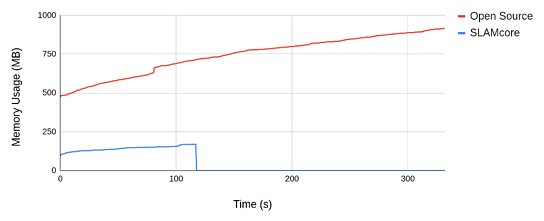

The graphs here illustrate the resource requirements of SLAMcore’s software compared to a recent state-of-the-art, open-source alternative. Both sets of software are running in the ‘out-of-the-box’ state with no additional specific tuning. Both are running on the same system with Arm v8.2 architecture processors, taking data from the same sensor set-up with stereo RGB cameras and an inertial measurement unit (IMU).

The plots show the processor load and memory usage of each system running on the same data. It is clear that both the average and the maximum demands on the processor are higher with the open-source software. The gap between peak and average processor use is also larger, making it harder to estimate demand. The same is true for memory. As the graph shows, the open-source system rapidly makes much higher demands on memory as the map is computed. More efficient memory management reduces the amount of memory the robot needs, again saving cost. More memory-efficient maps are also processed faster, leading to more accurate SLAM.

In fact, the efficiency of the software means that more data is processed faster with the same resources. The graphs show that the open-source software is not able to process the data in real time. It runs 2.8 times slower than real time, which means that when running live it can keep up with only about 36% of the incoming sensor data (1/2.8); the remaining 64%, nearly two thirds, will be dropped. This will significantly reduce accuracy and reliability.
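The arithmetic behind the "nearly two thirds" figure can be sketched as follows. It assumes a simple steady-state model in which sensor data arrives at a constant rate, nothing is buffered, and any data the system cannot keep up with is discarded; the function name `dropped_fraction` is invented for this illustration.

```python
def dropped_fraction(slowdown):
    """Fraction of live sensor data that cannot be processed.

    slowdown: processing time divided by recording time
              (1.0 means exactly real time, 2.8 means 2.8x slower).
    Assumes a constant data rate and no buffering (simplified model).
    """
    if slowdown <= 0:
        raise ValueError("slowdown must be positive")
    if slowdown <= 1.0:
        return 0.0  # keeps up with the sensors, nothing is dropped
    return 1.0 - 1.0 / slowdown

print(f"{dropped_fraction(2.8):.1%}")  # 64.3%
```

A system that runs at or faster than real time drops nothing under this model, which is why real-time performance is a threshold as much as a measure of speed.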

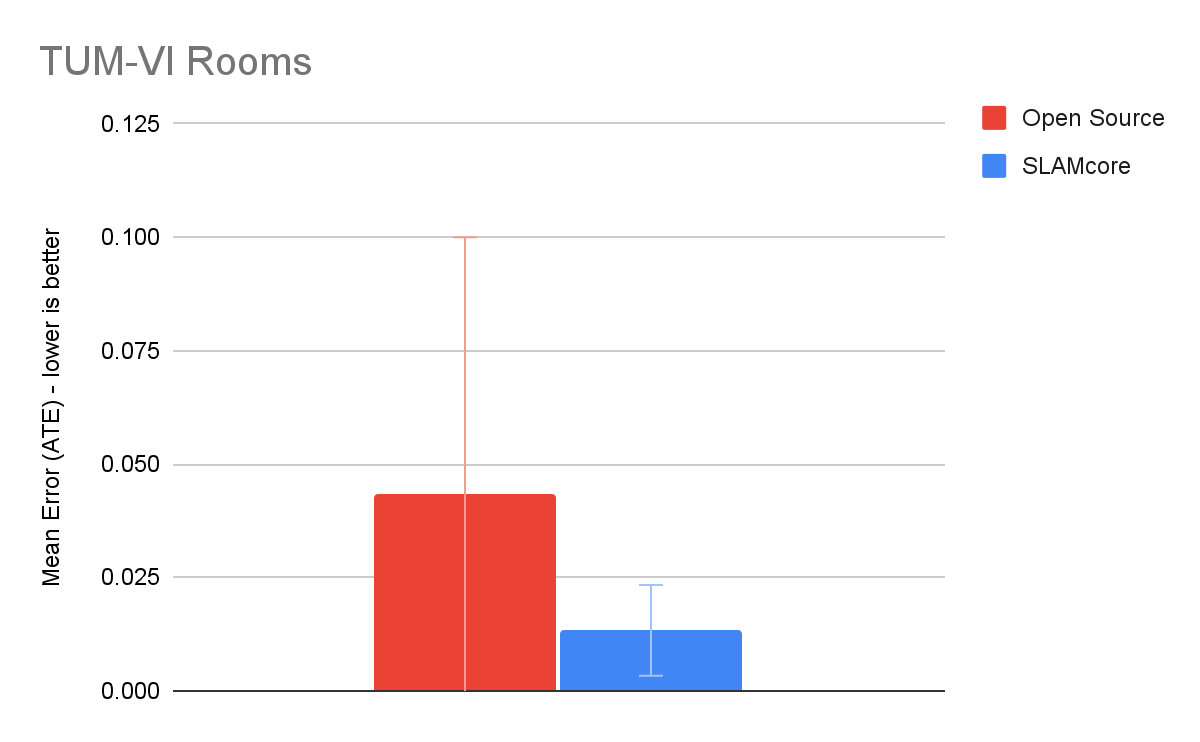

Similar differences can be seen in the measures of accuracy shown in this graph comparing the open-source and SLAMcore algorithms. Once again, both process exactly the same data from the same robot, mapping the same office environment. Not only does the open-source solution deliver errors of greater magnitude (0.04 m compared to just 0.015 m), but the variation in SLAMcore's error is again lower, suggesting greater predictability and robustness of performance.

These differences are important. Consistent and computationally efficient software makes it much easier for designers to predict the correct amount of compute resources needed for effective SLAM. Accuracy and real-time performance are critical for any commercially deployable solution.

Deployment at Speed and Scale

As more and more robots fulfil important roles in the world around us, finding effective and affordable combinations of hardware and software will be essential for commercially viable deployments. Open source is often the first move. It is free or low cost, easily obtained and implemented, and can therefore shortcut prototype and proof-of-concept work. But as robots leave the lab, the limitations of open source can become apparent. Frequent redesign, testing and reimplementation of software to cope with new situations, additional sensors or new environments not only adds work, but can also increase the load on memory and compute. This in turn can force a change of silicon, adding further unforeseen cost. What worked as a limited proof of concept in the lab suddenly becomes an expensive dead end as the realities of commercial deployment hit.

To break out of the proof-of-concept trap, and massively democratize access to robots that help with the important roles we know they can fulfil, the industry needs robust, repeatable, accurate and effective solutions. The complexity of robot design means that only the largest and best-funded organisations can afford to employ the expertise needed to crack all the different elements simultaneously. For the rest, we need to cultivate a supply chain of specialists who can collaborate to deliver key systems that work together. This vibrant ecosystem of robotics innovators will support the broad range of commercial-grade robotics solutions that graduate from the lab to the real world.