Inspired by the great work of our colleague Frank Dellaert (continued by Mark Boss) on an interesting series of blog posts charting the explosion of Neural Radiance Fields (NeRFs) at computer vision conferences, we have decided to do something similar for visual inertial SLAM (and beyond). While visual inertial SLAM is a much more mature technology than NeRF, many problems remain to be solved before visual inertial SLAM systems can deliver accurate, real-time positioning and mapping on platforms with limited computational resources in challenging scenarios. Visual inertial SLAM has tremendous applications in areas such as mixed reality, robotics and autonomous driving.

Slamcore has begun collating a range of papers and research articles for those concerned with visual inertial SLAM and beyond. Summaries have been developed based on paper/abstract availability. If a paper has been mischaracterized or missed, please email marketing@slamcore.com.

Note: If included in this post, images belong to the cited papers – copyright belongs to the authors or the organization responsible for publishing the respective paper.

Slamcore attended IROS 2022, which was held in the beautiful city of Kyoto, Japan. We have grouped the most relevant papers related to visual SLAM into different categories.

Visual SLAM in Dynamic Environments

One of the remaining big problems in visual SLAM is dealing with dynamic environments from both positioning and mapping perspectives.

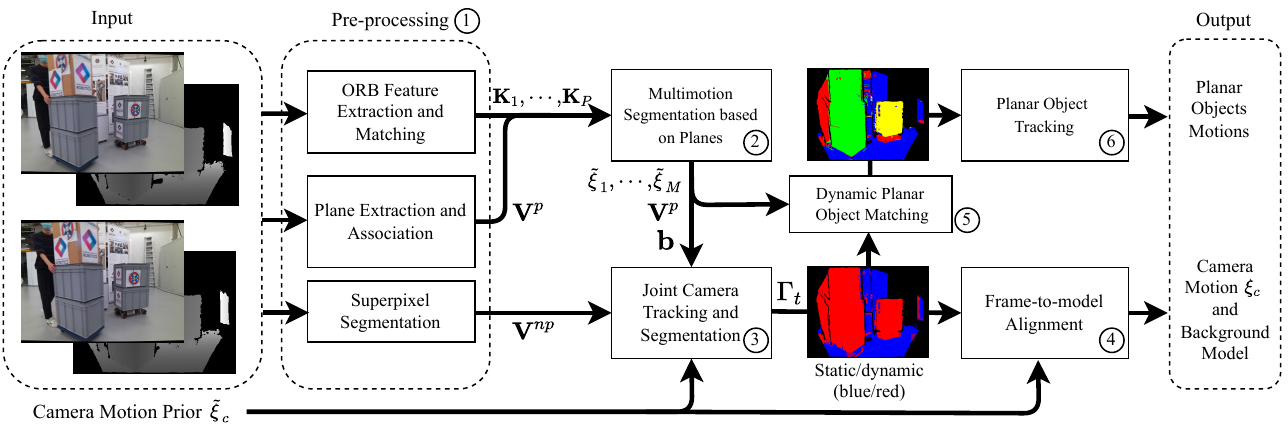

Long et al. presented an interesting method for RGB-D SLAM in dynamic environments that enables multi-object tracking, camera localisation and mapping of the static elements of the scene. The approach is based on a decomposition of the RGB-D image into planes and superpixels plus ORB features for tracking.

S3SLAM builds on top of ORB-SLAM2 and performs panoptic segmentation to label 3D landmarks with particular object instances. The authors propose a novel modification to Bundle Adjustment that incorporates structural constraints per object in the form of planes, which shows improved localization results.

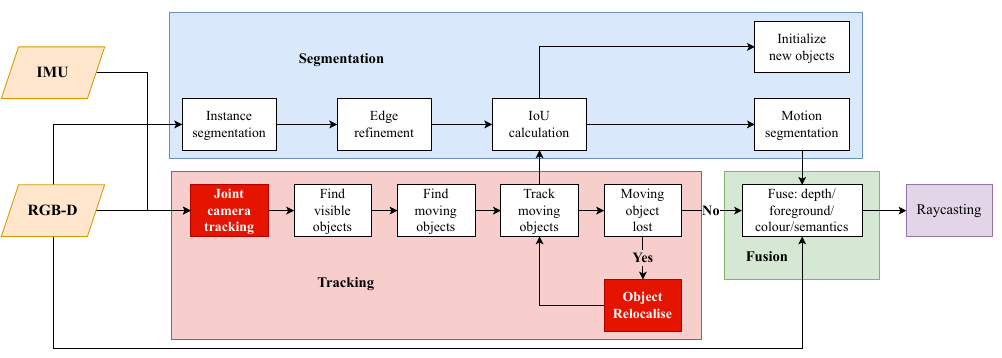

VI-MID extends MID-FUSION by incorporating IMU and object-level information into a tightly-coupled dense RGB-D visual inertial SLAM system. The paper also introduces a novel object re-localisation method (based on BRISK features) to recover moving objects that disappear/reappear in the camera view.

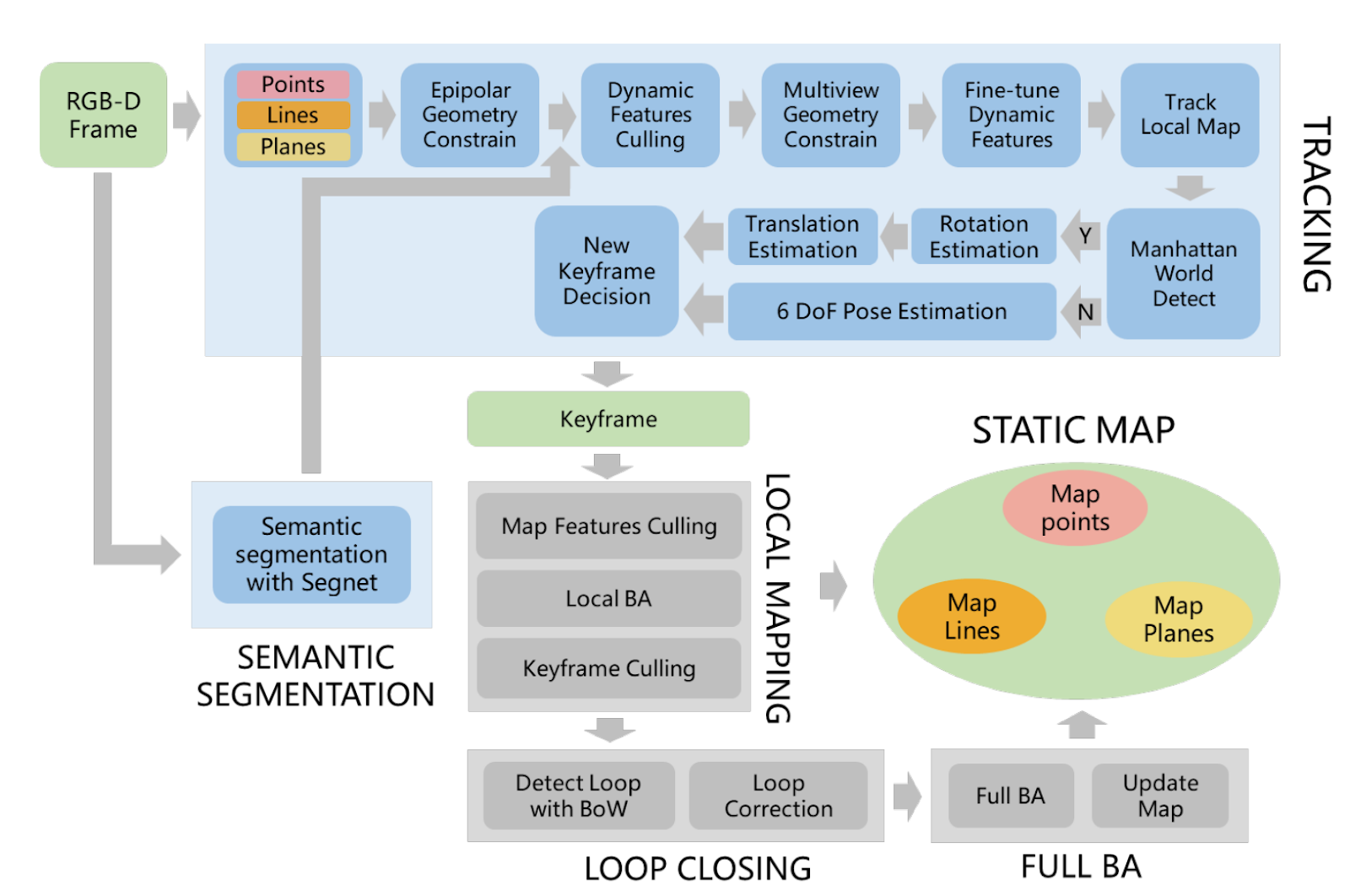

DRG-SLAM: A Semantic RGB-D SLAM using Geometric Features for Indoor Dynamic Scene (Wang et al.) exploits point, line, and plane features for extra robustness, and adds Manhattan world constraints on the plane measurements. It uses semantic segmentation and epipolar constraints to filter out dynamic features.
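To make the epipolar check concrete, here is a minimal sketch of how a matched feature can be flagged as dynamic when it lies too far from its epipolar line. It is not taken from the DRG-SLAM paper: the function names, the 1-pixel threshold and the toy fundamental matrix (a camera translating sideways, with identity intrinsics) are all assumptions made for illustration.

```python
import math

def epipolar_distance(F, p1, p2):
    """Distance from p2 (image 2) to the epipolar line F * p1 of its match p1 (image 1)."""
    x1 = (p1[0], p1[1], 1.0)
    # Epipolar line in image 2, as coefficients (a, b, c) of a*u + b*v + c = 0.
    a, b, c = (sum(F[r][k] * x1[k] for k in range(3)) for r in range(3))
    return abs(a * p2[0] + b * p2[1] + c) / math.hypot(a, b)

def split_static_dynamic(F, matches, threshold_px=1.0):
    """Split matches into (static, dynamic) using a hypothetical pixel threshold."""
    static, dynamic = [], []
    for p1, p2 in matches:
        bucket = static if epipolar_distance(F, p1, p2) <= threshold_px else dynamic
        bucket.append((p1, p2))
    return static, dynamic

# Toy fundamental matrix for a pure sideways translation (assumed, identity intrinsics):
# static points must stay on the same image row.
F = [[0.0, 0.0, 0.0],
     [0.0, 0.0, -1.0],
     [0.0, 1.0, 0.0]]
matches = [((10.0, 20.0), (15.0, 20.0)),   # stays on its row: consistent with camera motion
           ((30.0, 40.0), (33.0, 46.0))]   # moves 6 rows: likely on a moving object
static, dynamic = split_static_dynamic(F, matches)
```

In a real system the fundamental matrix would be estimated robustly from the matches themselves, and a purely geometric test like this one misses objects that move along their epipolar line, which is one reason to combine it with semantic segmentation.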

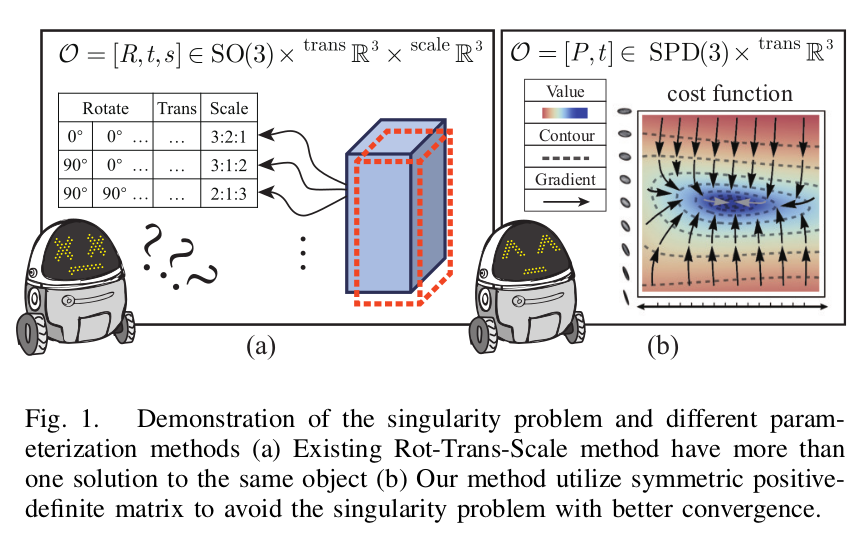

Hu et al. address the singularity between rotation and scale in the object representation for object-level SLAM by integrating symmetric positive definite (SPD) matrix manifold optimization into a non-linear least squares SLAM optimization framework that represents objects based on ellipsoids.
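One way to build intuition for this ambiguity is a toy 2D example. The sketch below is our own illustration, not the formulation of Hu et al.: it assumes an ellipse parameterized by an angle and two semi-axis lengths, and shows that different (rotation, scale) parameters can describe the same shape, whereas the SPD matrix `Q = R diag(a², b²) Rᵀ` is identical in each case.

```python
import math

def ellipse_spd(theta, a, b):
    """SPD shape matrix Q = R(theta) diag(a^2, b^2) R(theta)^T of a 2D ellipse."""
    c, s = math.cos(theta), math.sin(theta)
    q11 = c * c * a * a + s * s * b * b
    q12 = c * s * (a * a - b * b)
    q22 = s * s * a * a + c * c * b * b
    return ((q11, q12), (q12, q22))

def close(Q1, Q2, tol=1e-9):
    """True when two 2x2 matrices agree entry by entry up to tol."""
    return all(abs(x - y) < tol for r1, r2 in zip(Q1, Q2) for x, y in zip(r1, r2))

# Swapping the axes and rotating by 90 degrees gives the same ellipse.
same_after_swap = close(ellipse_spd(0.3, 2.0, 1.0),
                        ellipse_spd(0.3 + math.pi / 2, 1.0, 2.0))

# For a circle, the rotation angle is completely unobservable.
same_for_circle = close(ellipse_spd(0.0, 2.0, 2.0), ellipse_spd(1.2, 2.0, 2.0))
```

Both flags evaluate to `True`: the separate rotation and scale parameters are ambiguous, while the matrix representation is not.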

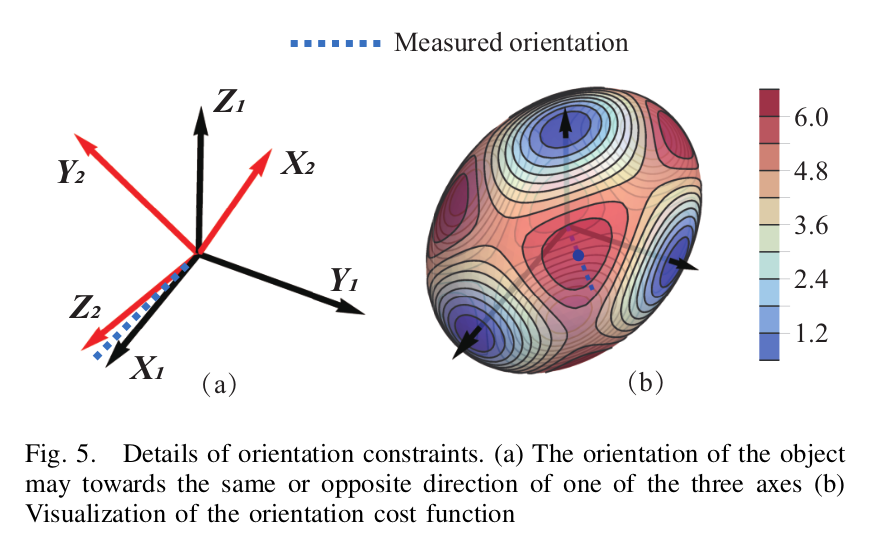

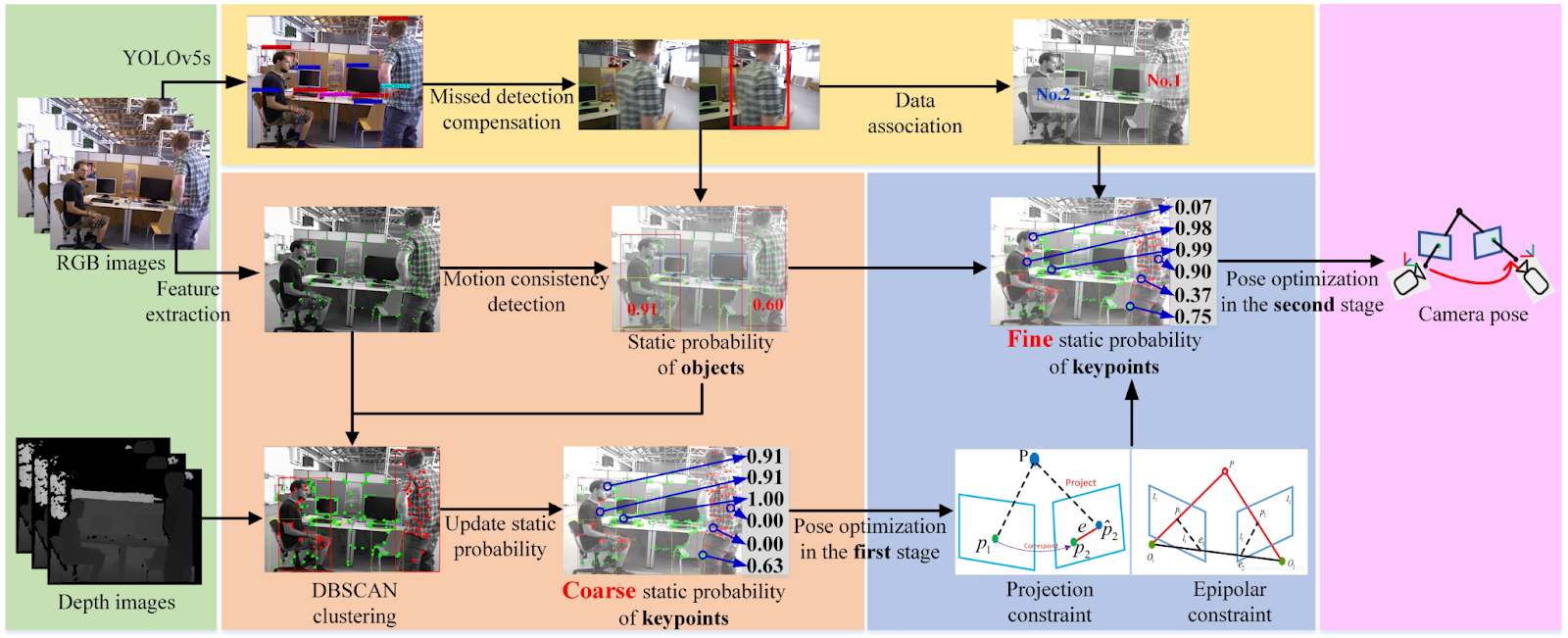

CFP-SLAM exploits object detection instead of semantic segmentation for real-time RGB-D SLAM in dynamic environments. This paper takes a coarse-to-fine approach to computing the static/dynamic probability of keypoints.

Adkins et al. proposed the concept of Probabilistic Object Maps (POMs) that represent the distributions of movable objects using pose-likelihood sample pairs from prior trajectories. Gaussian processes are used to estimate the likelihood of an object at a given pose, and this information is used to improve localization. The authors validated their approach using LiDAR data, but POMs can also be used with visual SLAM methods.

Visual SLAM with Geometric Priors

Many robotic applications exploit structural information to increase robustness, localization and/or mapping quality.

IMU preintegration for 2D SLAM problems using Lie Groups (Geer et al.) proposes an IMU pre-integration method for mobile robots where SLAM is constrained to a 2D plane. The IMU pre-integration method is based on Lie algebra and the authors show the derivation of the incremental pre-integration in the Lie group alongside the Jacobian computation.
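As a rough illustration of what planar pre-integration accumulates, the sketch below integrates a sequence of IMU samples into a relative rotation, velocity and position expressed in the frame of the first sample. It is a simplified Euler scheme of our own, not the derivation of Geer et al.: biases, noise propagation and Jacobians are omitted, and the sample layout `(omega, ax, ay)` is an assumption.

```python
import math

def preintegrate_2d(samples, dt):
    """Accumulate planar IMU samples (omega, ax, ay) into relative motion.

    Returns (dtheta, dv, dp) expressed in the body frame of the first sample.
    Biases, noise and Jacobians are deliberately left out of this sketch.
    """
    dtheta = 0.0
    dv = [0.0, 0.0]
    dp = [0.0, 0.0]
    for omega, ax, ay in samples:
        c, s = math.cos(dtheta), math.sin(dtheta)
        # Rotate the body-frame acceleration into the frame of the first sample.
        axr = c * ax - s * ay
        ayr = s * ax + c * ay
        # Position uses the velocity from before this sample's update.
        dp[0] += dv[0] * dt + 0.5 * axr * dt * dt
        dp[1] += dv[1] * dt + 0.5 * ayr * dt * dt
        dv[0] += axr * dt
        dv[1] += ayr * dt
        # On SO(2), composing rotations reduces to adding angles.
        dtheta += omega * dt
    return dtheta, tuple(dv), tuple(dp)

# One second of constant forward acceleration (1 m/s^2) with no rotation.
dtheta, dv, dp = preintegrate_2d([(0.0, 1.0, 0.0)] * 10, dt=0.1)
```

Because the result depends only on the IMU samples and not on the absolute pose at the start of the interval, it can be reused as a single relative constraint between two keyframes without re-integrating whenever the optimizer updates the poses.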

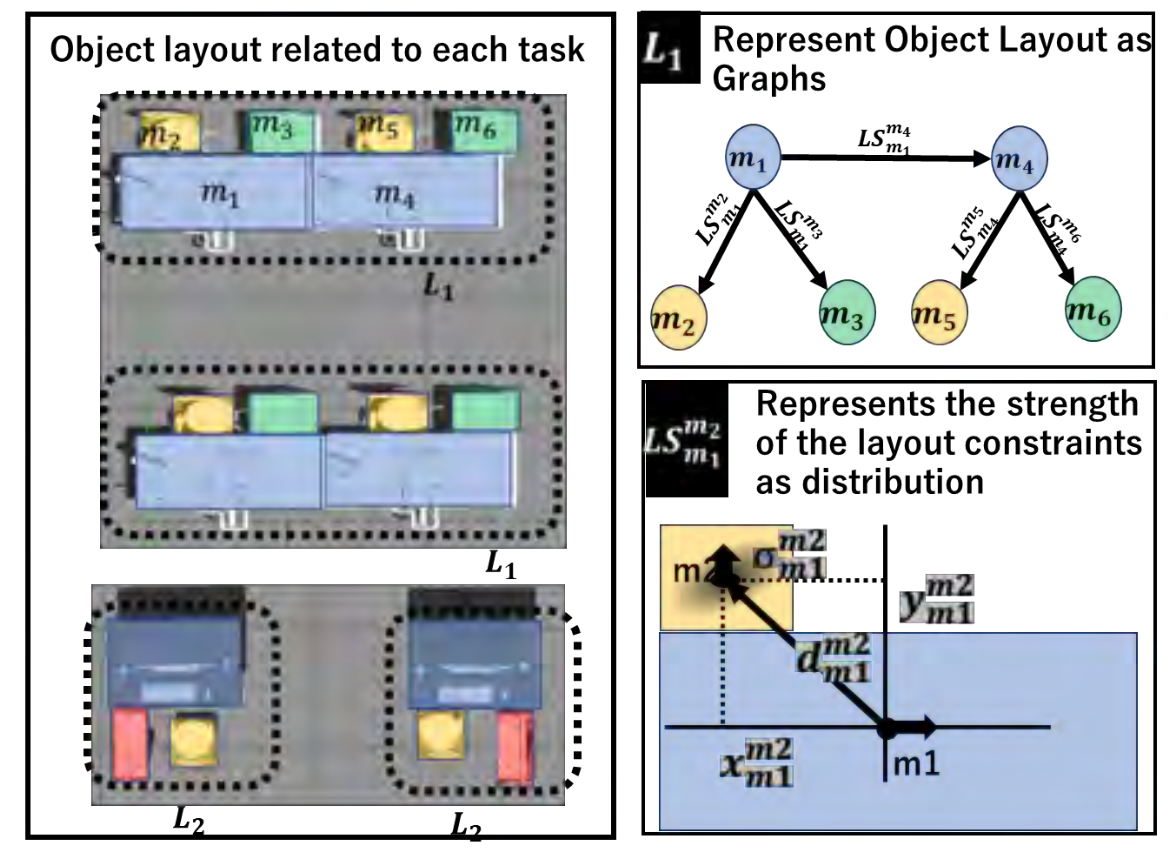

LayoutSLAM: Object Layout based Simultaneous Localization and Mapping for Reducing Object Map Distortion (Gunji et al.) exploits layout information for object mapping in crowded workspaces. The object layout is optimized in a graph-based SLAM framework, where the nodes correspond to the objects and the weights of the links represent the strength of the layout.

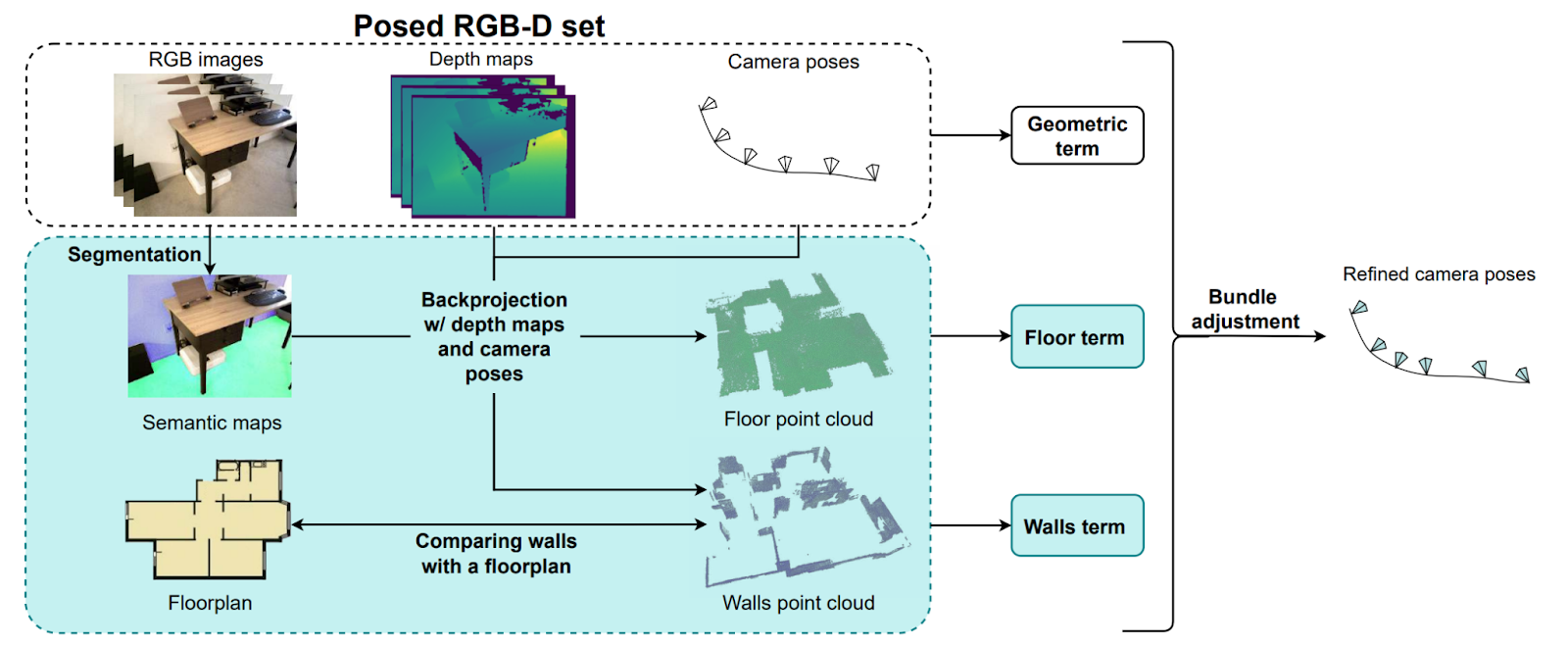

Sokolova et al. used floorplan information and semantic segmentation (floor and walls) for robust camera pose estimation and mapping using RGB-D data. They predict floor and walls from RGB images and use the depth and floorplan information to create error terms for the floor and walls. The error terms use point-to-point and point-to-plane distances and are refined in a bundle adjustment optimization.
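The two distance types mentioned above can be written in a few lines. This is a generic sketch with hypothetical function names and toy values, not the exact error terms used by Sokolova et al.

```python
import math

def point_to_plane(point, plane_point, plane_normal):
    """Signed distance from `point` to a plane given by a point on it and a unit normal."""
    return sum(n * (p - q) for n, p, q in zip(plane_normal, point, plane_point))

def point_to_point(point, target):
    """Euclidean distance between two 3D points."""
    return math.dist(point, target)

# A depth point labelled "floor" that sits 5 cm above the floor plane z = 0 (assumed values).
floor_residual = point_to_plane((1.0, 2.0, 0.05), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))

# A depth point labelled "wall" that sits 10 cm short of a floorplan wall at x = 3.
wall_residual = point_to_plane((2.9, 1.0, 1.2), (3.0, 0.0, 0.0), (1.0, 0.0, 0.0))
```

A point-to-plane term only penalizes the offset along the plane normal, so a point is free to slide along the wall or floor, whereas a point-to-point term pins it to one specific location.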

Loop Closure Detection

Loop closure detection is a critical component of SLAM systems since it allows using information from long-term data association to reduce the accumulated drift. However, it is important to have a robust loop closure detection and a robust pose graph optimization to mitigate the impact of potential outliers.

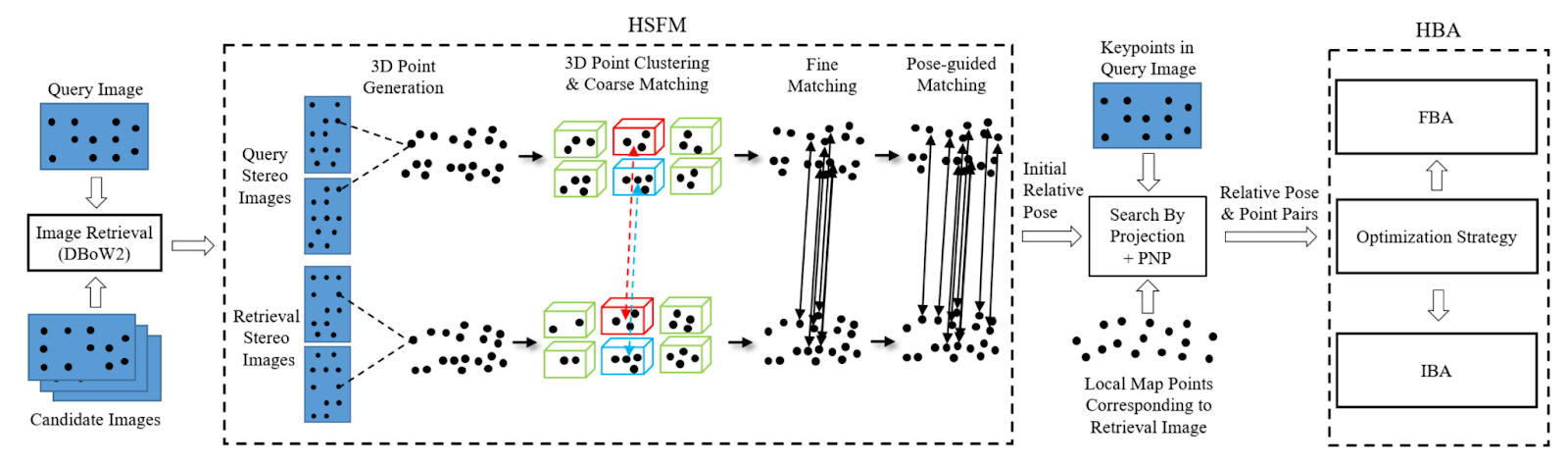

DH-LC: Hierarchical Matching and Hybrid Bundle Adjustment Towards Accurate and Robust Loop Closure (Peng et al.) takes a coarse-to-fine approach based on the 3D points from the stereo images of loop closure candidates for robust keypoint matching. In addition, the authors proposed an efficient version of bundle adjustment that combines incremental or full BA optimization steps depending on the accumulated drift.

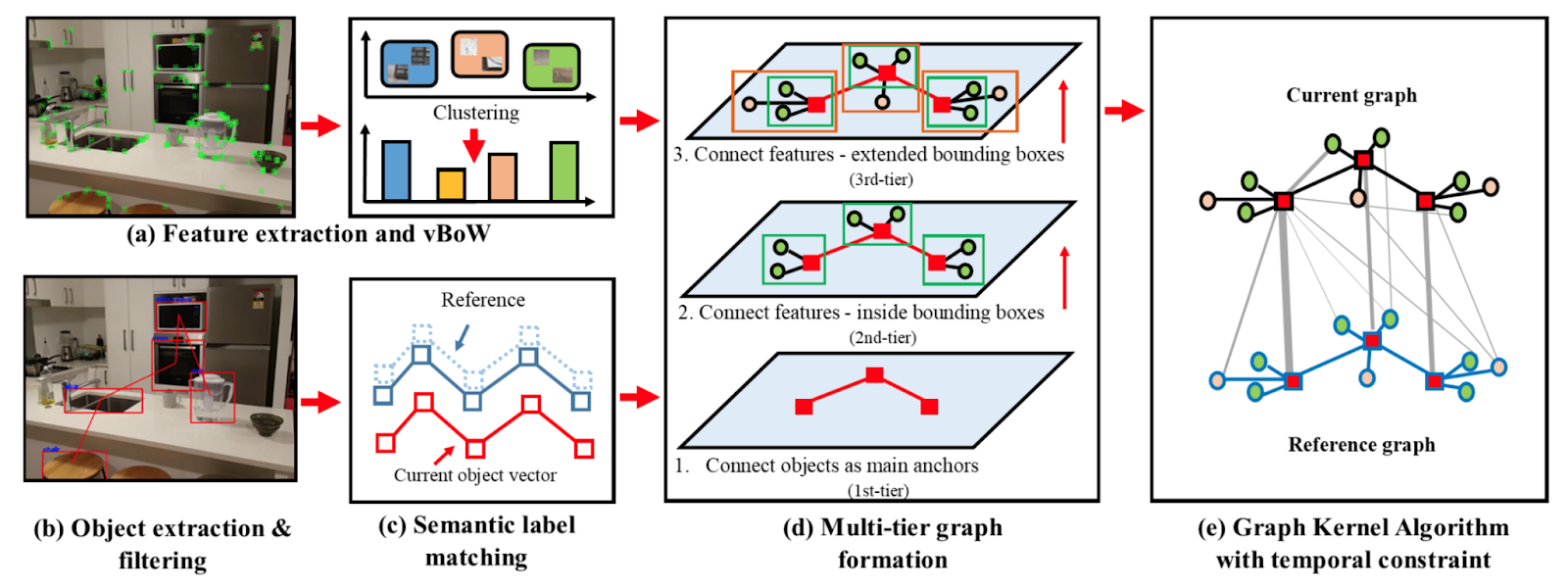

The work of Kim et al. performs robust loop closure detection by combining visual features and semantic information into a unified graph structure. Loop closures are identified by using a Graph Neural Network (GNN) based approach that specializes in temporal subgraphs.

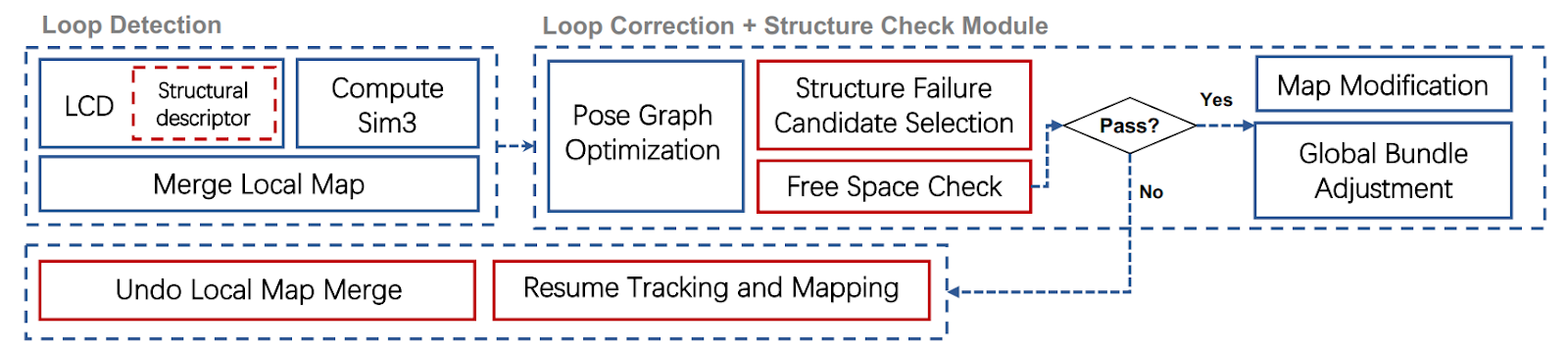

Deng et al. also used a graph structure for relocalization that combines objects and planes for pose estimation. Xie et al. introduced a method for structure representation in visual SLAM using sparse point clouds based on spherical harmonics (SH). A structural check is performed after pose graph optimisation that helps discard erroneous loop closure hypotheses.

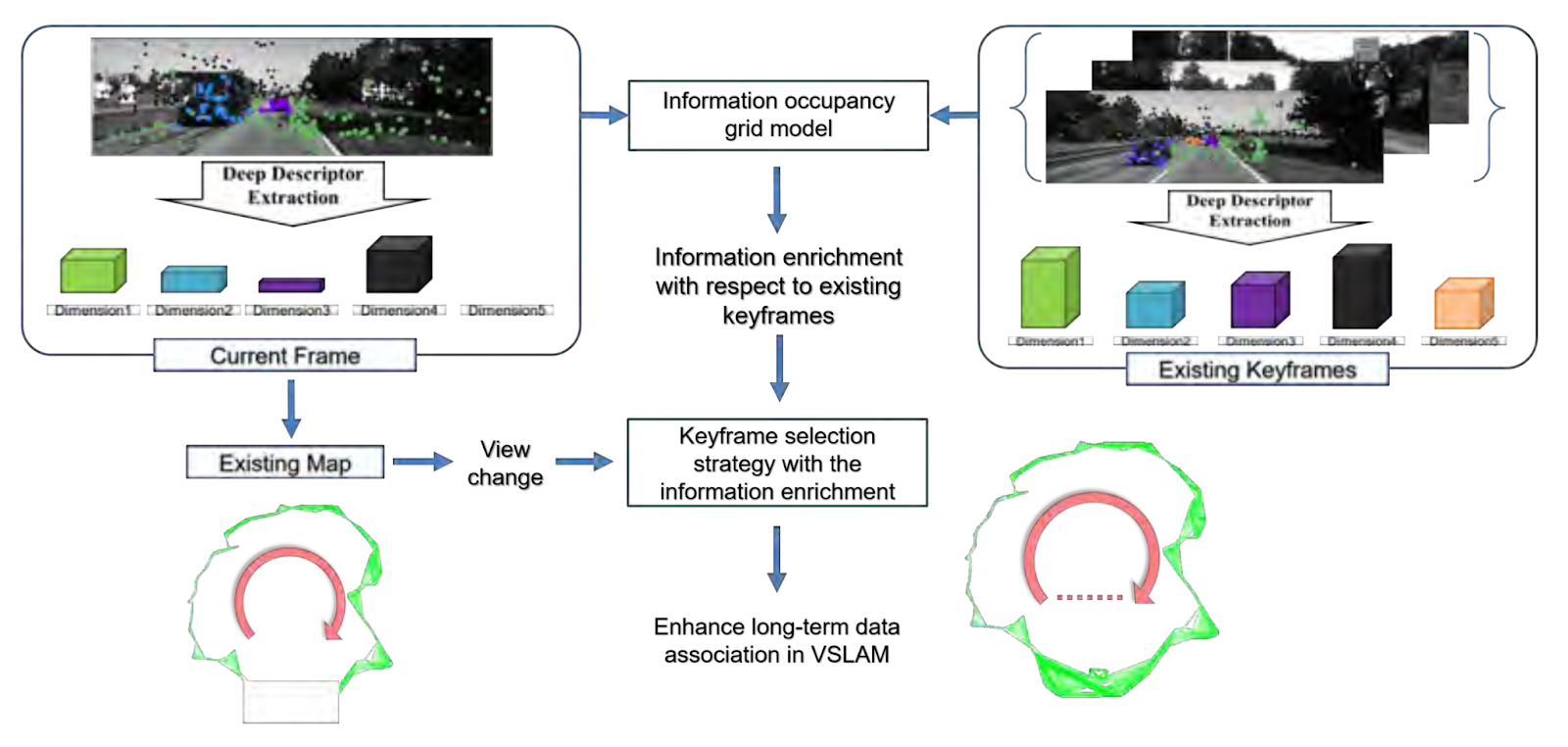

Typical keyframe-based visual SLAM systems add keyframes from a short-term tracking perspective (e.g. to avoid losing tracking in a local sliding window). In contrast, Keyframe Selection with Information Occupancy Grid Model for Long-term Data Association (Chen et al.) introduces a keyframe selection criterion that combines information from an occupancy grid model and deep features. This keyframe insertion criterion enhances long-term data association (potential loop closures) by selecting texture-rich keyframes.

Pose Graph Optimization

In a SLAM pose graph, the robot’s trajectory is modeled as a factor graph whose nodes are the robot poses and whose edges correspond to different measurements, including loop closures. Pose Graph Optimization (PGO) methods use a variety of optimization techniques to minimize (or maximize) a cost function built from the nodes and edges of the graph, typically with some robustness against outliers. PGO is particularly critical in long-term scenarios under memory or computation constraints, where the robot must be able to determine which information should be preserved and which can safely be forgotten.
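To illustrate the idea on the smallest possible case, the sketch below optimizes a one-dimensional pose graph with three odometry edges and one loop closure by solving the weighted least-squares normal equations. It is a toy example under our own assumptions (scalar poses, linear measurements, no robust kernel, hypothetical function names), not any of the methods discussed here.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x

def optimize_pose_graph_1d(num_poses, edges):
    """edges: (i, j, z, w) with z the measured x_j - x_i and w its weight.

    Pose 0 is anchored at 0 to remove the gauge freedom.
    """
    n = num_poses - 1                      # unknowns are x_1 .. x_{num_poses-1}
    H = [[0.0] * n for _ in range(n)]      # J^T W J
    g = [0.0] * n                          # J^T W z
    for i, j, z, w in edges:
        # Residual x_j - x_i - z has Jacobian -1 w.r.t. x_i and +1 w.r.t. x_j.
        terms = [(idx, jac) for idx, jac in ((i, -1.0), (j, 1.0)) if idx != 0]
        for a, ja in terms:
            g[a - 1] += w * ja * z
            for b, jb in terms:
                H[a - 1][b - 1] += w * ja * jb
    return [0.0] + solve_linear(H, g)

odometry = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0)]
loop_closure = [(0, 3, 2.7, 1.0)]          # disagrees with odometry by 0.3
poses = optimize_pose_graph_1d(4, odometry + loop_closure)
```

With equal weights, the 0.3 disagreement is spread evenly over the four edges, giving poses of roughly 0, 0.925, 1.85 and 2.775. A wrong loop closure would be spread across the trajectory in exactly the same way, which is why practical systems add outlier rejection or robust cost functions.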

Doherty et al. proposed a spectral measurement sparsification for pose graph SLAM that is based on a convex relaxation and is computationally inexpensive. Tazaki exploits a minimum spanning tree approach for hierarchical pose graph representation. Yongbo et al. proposed an anchor selection method based on graph topology and submodular optimization.

Multi-Agent SLAM

Collaborative multi-agent SLAM is a key part of real-world robotic applications (e.g. warehouses) where each robot shares information with its neighbors about its localization and mapping.

A typical scenario is that the robots send their observations to a server and a shared geometric model is reconstructed in a distributed manner. Tian et al. proposed Lazily Aggregated Reduced Preconditioned Gradient (LARPG) with the idea of reducing the communication load between the robots and the server while keeping the accuracy of the geometric model. The idea is for agents to selectively upload a subset of local information that is useful for global optimization.

Polizzi et al. presented a novel approach for decentralized state estimation using thermal images and inertial measurements. Online photometric calibration is performed on the thermal images to improve feature tracking and place recognition. The communication pipeline uses very efficient binary VLAD descriptors for low bandwidth usage. The source code and datasets are available from the project website.

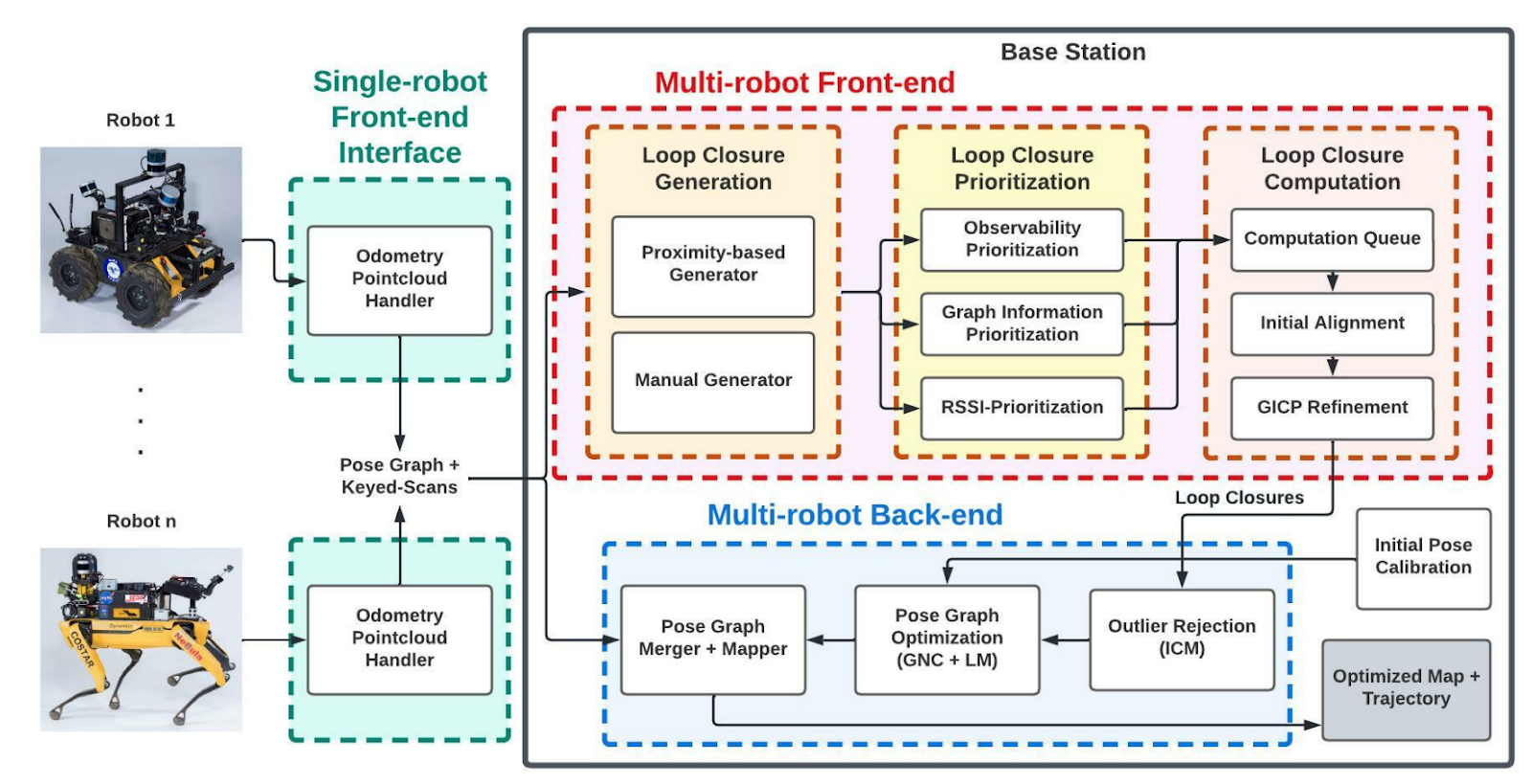

LAMP 2.0 showed a multi-robot system working in large-scale underground environments such as the ones from the DARPA Subterranean Challenge. Information from multiple robots is merged in a multi-robot backend that incorporates a loop closure prioritization module, which uses a GNN to predict the outcome of a pose graph optimization triggered by a new loop closure.

Other

Hug et al. proposed a continuous-time stereo visual inertial odometry system that uses splines. The authors demonstrate that their continuous-time system shows competitive accuracy compared to discrete-time SLAM methods on standard benchmarks. Furthermore, the authors released an open source implementation of their method, which is great since there is a lack of available continuous-time open source implementations in the robotics community.
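For readers less familiar with continuous-time trajectories, the sketch below evaluates one segment of a uniform cubic B-spline over 2D positions. It only illustrates the general idea of querying a trajectory at an arbitrary time (for example, at an IMU timestamp between two camera frames). The choice of a uniform cubic B-spline, the position-only state and the function name are our assumptions and are not taken from the paper.

```python
def cubic_bspline(p0, p1, p2, p3, u):
    """Evaluate a uniform cubic B-spline segment at u in [0, 1].

    p0..p3 are the four control points that influence this segment.
    """
    b0 = (1 - u) ** 3 / 6
    b1 = (3 * u**3 - 6 * u**2 + 4) / 6
    b2 = (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6
    b3 = u**3 / 6
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d
                 for a, b, c, d in zip(p0, p1, p2, p3))

# Control points spaced 1 m apart along x (assumed values).
control = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
midway = cubic_bspline(*control, u=0.5)   # position halfway through the segment
```

The optimizer then estimates the control points rather than one pose per frame, so measurements arriving at different rates can all constrain the same smooth trajectory.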

Park and Bae presented a method to perform point sparsification for feature-based visual SLAM methods. Their approach formulates a maximum pose-visibility and maximum spatial diversity problem, which is solved with minimum-cost maximum-flow graph optimization. The experimental results show that comparable or even better localization accuracy can be achieved with ⅓ of the original map points and ½ of the computation.

Qadri et al. presented InCOpt: Incremental Constrained Optimization using the Bayes Tree. This method addresses the problem of incrementally solving constrained non-linear optimization problems formulated as factor graphs. The method uses an augmented Lagrangian-based incremental constrained optimizer that views matrix operations as messages passing over the Bayes tree. An implementation of InCOpt is available here.

We hope you have enjoyed reading this blog. We will soon publish our next blog about visual inertial SLAM (and beyond) highlights from ECCV 2022 and we are sure we will be talking more about synergies between SLAM and NeRFs there!