The coming decade will see an exponential increase in the number of robots helping humans in their everyday lives. But applications ranging from transportation (self-driving cars) and security (smart homes) to daily chores (lawn mowers) require robots to better understand the physical world around them. Currently, this understanding comes at a significant computational and financial cost.

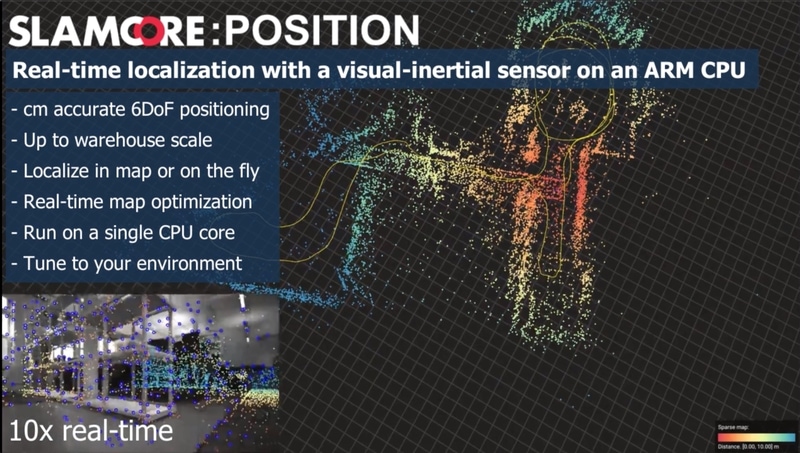

At SLAMcore, we have been working on addressing different levels of spatial AI. SLAMcore:Position is a visual-inertial simultaneous localization and mapping (VI-SLAM) solution that lets robots navigate safely and in real time through new and complex environments such as warehouses, using commodity chipsets like Jetson Tegra TX2 and Raspberry Pi. However, to truly interact with their environment, robots need a higher-level understanding of what’s around them.

Discerning pixels

All a robot ‘sees’ are pixels: raw data from cameras. To make sense of a scene, a robot needs to be able to discern what category every pixel represents and how many different objects are present. It needs to know the difference between ‘countable’ objects (people, chairs, cars, etc.) and ‘uncountable’ regions (ceiling, walls, vegetation, etc.). Labelling every pixel with its category and, for countable objects, the individual instance it belongs to is known as panoptic segmentation. Currently it requires two neural networks (or a single network with multiple branches), each consuming significant computing resources. Outside high-powered platforms like self-driving cars, doing this on commodity robots is still very challenging.
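To make the countable/uncountable distinction concrete, here is a minimal sketch of what a panoptic labelling could look like as data. The category sets, the `(category, instance_id)` layout and the `summarise` helper are illustrative assumptions, not SLAMcore's actual representation.

```python
from collections import defaultdict

# Hypothetical category split, for illustration only.
THINGS = {"person", "chair", "car"}          # countable objects
STUFF = {"ceiling", "wall", "vegetation"}    # uncountable regions

def summarise(panoptic):
    """Count instances per countable category and pixels per uncountable one.

    `panoptic` is a 2D grid of (category, instance_id) pairs;
    instance_id is None for uncountable regions.
    """
    instances = defaultdict(set)
    stuff_pixels = defaultdict(int)
    for row in panoptic:
        for category, instance_id in row:
            if category in THINGS:
                instances[category].add(instance_id)
            else:
                stuff_pixels[category] += 1
    thing_counts = {c: len(ids) for c, ids in instances.items()}
    return thing_counts, dict(stuff_pixels)

# A tiny 3x4 scene: two people standing in front of a wall.
W = ("wall", None)
P1, P2 = ("person", 1), ("person", 2)
scene = [
    [W,  W,  W,  W],
    [P1, P1, P2, W],
    [P1, P1, P2, W],
]
thing_counts, stuff_pixels = summarise(scene)
print(thing_counts)   # {'person': 2}
print(stuff_pixels)   # {'wall': 6}
```

The instance id is what lets the robot answer "how many people?", while the wall is only ever measured as a region.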

The main reason panoptic segmentation needs so much computational power is that existing methods use a two-stage process. First, two tasks are run separately: semantic segmentation identifies the category of each pixel, while instance segmentation groups pixel regions into individual object instances. Second, the results of these tasks must be merged to obtain the final prediction. So, every pixel is analysed once by one neural network (or branch) to predict its semantic category, and then multiple times by a separate neural network (or branch) to see which pixels collectively represent a specific object instance.
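The merge step described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions: the `merge_panoptic` function, its input layout and the "first instance wins" overlap rule are hypothetical choices, not the merging heuristic of any particular published method.

```python
def merge_panoptic(semantic, instance_masks):
    """Combine a semantic map with instance masks into one panoptic map.

    semantic: 2D grid of category names (output of the semantic stage).
    instance_masks: list of (category, set of (row, col)) pairs
                    (output of the instance stage).
    Returns a 2D grid of (category, instance_id) pairs.
    """
    height, width = len(semantic), len(semantic[0])
    # Start with every pixel labelled by category and no instance.
    panoptic = [[(semantic[r][c], None) for c in range(width)]
                for r in range(height)]
    for instance_id, (category, pixels) in enumerate(instance_masks, start=1):
        for r, c in pixels:
            # Accept the instance label only where both stages agree, and
            # never overwrite an earlier instance (assumed overlap rule).
            if semantic[r][c] == category and panoptic[r][c][1] is None:
                panoptic[r][c] = (category, instance_id)
    return panoptic

semantic = [
    ["wall", "person", "person"],
    ["wall", "person", "person"],
]
# Two detected people whose masks overlap at pixel (1, 1).
masks = [
    ("person", {(0, 1), (1, 1)}),
    ("person", {(0, 2), (1, 2), (1, 1)}),
]
merged = merge_panoptic(semantic, masks)
for row in merged:
    print(row)
```

Even in this toy form, the two stages can disagree or overlap, so the merge needs its own conflict-resolution rules on top of the cost of running both stages.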

This grouping of pixels into individual object instances is done by creating ‘bounding boxes’ and assessing the probability that an object exists within each box. To recognise all object instances in a scene, a robot must repeat this process with a multitude of bounding boxes of varying sizes and shapes to cover the different objects in the image. Evaluating so many candidate boxes is what makes this process so demanding of computing resources.
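A back-of-the-envelope count shows how quickly candidate boxes add up. The sketch below assumes an anchor-style scheme in which boxes of several sizes and shapes are placed on a regular grid; the stride, scales and aspect ratios are illustrative values, not figures from any specific detector.

```python
def count_candidate_boxes(height, width, stride, scales, aspect_ratios):
    """Number of candidate boxes when every grid position gets one box
    per (scale, aspect ratio) combination."""
    rows = height // stride
    cols = width // stride
    return rows * cols * len(scales) * len(aspect_ratios)

# Assumed setup: a 2048x1024 image (the resolution of Cityscapes frames),
# one grid position every 16 pixels, 3 box sizes and 3 box shapes.
total = count_candidate_boxes(
    height=1024, width=2048, stride=16,
    scales=(64, 128, 256), aspect_ratios=(0.5, 1.0, 2.0),
)
print(total)  # 73728
```

Under these assumptions, tens of thousands of boxes have to be scored for a single frame before any of them is kept.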

Single Step Process

At SLAMcore, we believe that this approach is unnatural, counterintuitive and unnecessary. Semantic content already categorises all pixels in the scene, so how can we use this data to simultaneously group pixels of countable objects into individual instances of the object?

To do this, we have developed a method to extract instance information using only the semantic content. By adding a prediction of the centre of each object and of each pixel’s distance from the object edge, pixels belonging to countable objects are automatically grouped into individual instances. Doing this only marginally increases the compute resources required by the first neural network, and removes the need for the instance segmentation neural network entirely.
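The idea of grouping pixels without bounding boxes can be illustrated with a toy example. This is a simplified sketch, not the method from the paper: it assumes the network's edge-distance prediction is already available as a grid, treats the pixels furthest from any edge as a stand-in for the predicted object centre, and uses a hypothetical `group_instances` helper with an arbitrary `core_threshold`.

```python
from collections import deque

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def group_instances(is_thing, edge_distance, core_threshold=2):
    """Group countable-object pixels into instances without bounding boxes.

    is_thing: 2D grid of booleans from the semantic prediction.
    edge_distance: 2D grid, each pixel's predicted distance to the edge
                   of its own object.
    Returns a 2D grid of instance ids (0 = not a countable object).
    """
    height, width = len(is_thing), len(is_thing[0])
    labels = [[0] * width for _ in range(height)]
    frontier = deque()
    next_id = 0

    def is_core(r, c):
        return is_thing[r][c] and edge_distance[r][c] >= core_threshold

    # Step 1: pixels far from any edge form one connected core per object.
    for r in range(height):
        for c in range(width):
            if labels[r][c] or not is_core(r, c):
                continue
            next_id += 1
            labels[r][c] = next_id
            stack = [(r, c)]
            while stack:
                y, x = stack.pop()
                frontier.append((y, x))
                for dy, dx in NEIGHBOURS:
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < height and 0 <= nx < width
                            and not labels[ny][nx] and is_core(ny, nx)):
                        labels[ny][nx] = next_id
                        stack.append((ny, nx))

    # Step 2: grow all cores outwards together, so each remaining
    # object pixel joins the core it is closest to.
    while frontier:
        y, x = frontier.popleft()
        for dy, dx in NEIGHBOURS:
            ny, nx = y + dy, x + dx
            if (0 <= ny < height and 0 <= nx < width
                    and is_thing[ny][nx] and not labels[ny][nx]):
                labels[ny][nx] = labels[y][x]
                frontier.append((ny, nx))
    return labels

# Two people standing shoulder to shoulder (columns 0-2 and 3-5), then wall.
is_thing = [[True] * 6 + [False] for _ in range(3)]
edge_distance = [
    [1, 1, 1, 1, 1, 1, 0],
    [1, 2, 1, 1, 2, 1, 0],
    [1, 1, 1, 1, 1, 1, 0],
]
labels = group_instances(is_thing, edge_distance)
for row in labels:
    print(row)  # each row prints [1, 1, 1, 2, 2, 2, 0]
```

In the semantic mask alone the two people form a single blob. It is the drop in edge distance where they touch that separates them, and no candidate boxes are ever scored.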

Efficient and cost-effective

Our method is more computationally efficient than previous methods of comparable performance. You can see it in action in a dynamic environment in this video of a busy train station with lots of people moving around.

We also have a video of panoptic segmentation working on the popular Cityscapes autonomous driving dataset.

More technical details can be found in the associated paper.

The real value of this efficiency is in reducing dependence on expensive, bespoke processors with all the energy, cooling and memory resources that come with them. By reducing the computational overhead required for semantic understanding, SLAMcore is helping robot developers to do more with lower-cost components. This, in turn, opens the door to more developers and helps reduce the overall cost of developing and building useful robots.

We are committed to building an ecosystem that can develop and deliver the robots that will help humanity tackle and overcome pressing challenges. If you are interested in working with us, or would like to join our Visionaries Programme, please get in touch.

Associated publication

This blog was co-authored by Dr Pablo F Alcantarilla. The associated paper was co-authored by Dr Ujwal Bonde, Dr Pablo F Alcantarilla and Dr Stefan Leutenegger. Read the paper here: http://arxiv.org/abs/2002.07705